3. Exchange Hybrid Deployment

Exchange Online presents its own set of SLAs, and it is of interest to us here because of its interactions with on-premises Exchange. Assuming that your organization is running in Hybrid mode, there will be three on-premises points of interaction with Exchange Online. Specifically, these interaction points are as follows:

- Exchange CAS servers

- Directory synchronization

- Active Directory Federation Services 2.x

None of these is highly available by default, because each is deployed on a single server. The only component for which keeping a single server may be acceptable is directory synchronization, since it is built as a no-touch software appliance by default, unless it is deployed using the full-featured Forefront Identity Manager with a highly available SQL instance.

Exchange Client Access Servers providing Exchange Hybrid mode integration may be a subset of the total number of Client Access Servers contained in your organization. If more than one of these exists, they will be load-balanced. Client Access Servers facilitating Exchange Hybrid mode are responsible for the interaction between Exchange Online and on-premises Exchange, and they directly facilitate the features that make the on-premises system and Office 365 appear as a single organization. With this in mind, you will do well to ensure that sufficient redundancy exists to guarantee availability during a server outage, as well as during periods of high server load.

Active Directory Federation Services (ADFS) enables external authentication to an on-premises Active Directory by validating credentials against Active Directory and returning a token that is consumed by Office 365, thereby allowing one set of Active Directory credentials to be used against both on-premises services and Office 365. ADFS servers may have a DMZ-based component (ADFS Proxy servers) alongside the LAN-based ADFS server. ADFS Proxy servers are a version of ADFS that is specifically designed to be deployed in the DMZ, a secure network location separated from the production network by additional layers of firewalls. Since all that these Proxy servers do is intercept credentials securely and pass them on to LAN-based ADFS instances, they may not be required if an equivalent service is available via Microsoft TMG/UAG or similar.

These types of servers are great virtualization targets because of their light load. You will require a minimum of two ADFS servers and two ADFS Proxy servers, and possibly more depending on load.

Your availability concerns for Exchange Online/Hybrid mode include the following:

- Internet connectivity.

- Sufficient ADFS servers.

- Sufficient ADFS Proxy servers (if required).

- Networking (Reverse Proxy, firewall).

- Load balancer.

- Validity of certificates: The server certificate, issued by a third-party certificate provider, must not be expired. The certificate may be a SAN certificate or a wildcard certificate.
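Certificate expiry is one of the easier items on this list to monitor. The following is a minimal Python sketch of such a check, not a prescribed tool. The function names and the 30-day warning threshold are our own assumptions; the only library call relied upon is `ssl.cert_time_to_seconds` from the Python standard library, which parses the `notAfter` string found in a certificate.

```python
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Return the number of days between `now` and the certificate expiry.

    `not_after` is the notAfter string of a certificate in the format
    "Jan  5 09:34:43 2018 GMT". A negative result means it has expired.
    """
    expires = datetime.fromtimestamp(
        ssl.cert_time_to_seconds(not_after), tz=timezone.utc
    )
    return (expires - now).total_seconds() / 86400


def needs_renewal(not_after: str, now: datetime, warn_days: int = 30) -> bool:
    """Flag certificates that are expired or inside the warning window.

    The 30-day default is an illustrative assumption, not a recommendation
    taken from this text.
    """
    return days_until_expiry(not_after, now) <= warn_days
```

In practice, the `notAfter` value can be read from the dictionary returned by `ssl.SSLSocket.getpeercert()` after connecting to the published endpoint, and `now` would be `datetime.now(timezone.utc)`.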

4. Database Availability Group Planning

Database availability group (DAG) planning requires you to balance a number of factors. Most of these are interdependent and require significant thought and planning.

DATABASE SIZING

The maximum database size you plan for should not be based purely on the maximum database size supported by Exchange 2013. Large databases require longer backup/restore and reseed times, especially when over the 1 TB mark. Database sizes of 1 TB and upward are impractical to back up, and they should only be considered if enough database copies exist (specifically, three or more) that a traditional backup is not required. You need to strike a balance between fewer nodes and larger databases versus more nodes and smaller databases.

DATABASE COPIES

The number of database copies required in order to meet availability targets is a relatively simple determination. Earlier, we discussed the number of disks or databases required in order to achieve a specific availability. If we have been given a stated availability target of 99.99 percent, then we will not be able to achieve such a target with a single database copy. Four copies within a datacenter is the minimum number required for a 99.99 percent availability target. The number of database copies, however, is just one of the factors in our availability calculation.
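To illustrate how the number of copies relates to an availability target, here is a short Python sketch. It assumes that copies fail independently and that the database is available whenever at least one copy is healthy. The per-copy availability of 90 percent used in the usage note is purely an illustrative assumption and is not a figure taken from this text; substitute the per-copy value from your own calculation.

```python
from decimal import Decimal


def combined_availability(per_copy: Decimal, copies: int) -> Decimal:
    """Availability when any one of `copies` independent copies suffices.

    Computed as 1 - (1 - per_copy) ** copies. Decimal is used so that
    comparisons against targets such as 0.9999 are exact.
    """
    return 1 - (1 - per_copy) ** copies


def minimum_copies(per_copy: Decimal, target: Decimal, limit: int = 16) -> int:
    """Smallest number of copies that meets `target`, searching up to `limit`."""
    for copies in range(1, limit + 1):
        if combined_availability(per_copy, copies) >= target:
            return copies
    raise ValueError("target not reachable within the given limit")
```

Under the assumed 90 percent per-copy figure, `minimum_copies(Decimal("0.9"), Decimal("0.9999"))` returns 4, since three copies yield 99.9 percent and four yield 99.99 percent.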

In multi-datacenter scenarios, datacenter activation is a manual step, as opposed to the automatic failover provided by high availability. Therefore, a switchover requires more time and incurs more downtime than an automatic failover. While Exchange 2013 is able to automate a switchover event, we would argue that the business, via the administrator initiating the event, should wield that level of control, so that the state of Exchange is always known and understood.

When the second datacenter uses RAID to protect volumes on a single server, as opposed to individual servers with isolated storage, this slightly increases the availability of each individual volume and therefore slightly increases overall availability. In the case of three or more database copies, however, the additional gain will hardly justify the additional costs of doubling the disk spindles (depending on the RAID level) and the additional RAID controllers. Applying the principle of failure domains, it may be cheaper to deploy extra servers with isolated storage, as opposed to deploying the extra disks and RAID controller per RAID volume required to achieve higher availability.

DATABASE AVAILABILITY GROUP NODES

The number of DAG nodes is driven not only by the number of copies required but also by how many nodes are required in a database availability group in order to maintain quorum. Quorum is the number of votes the cluster needs in order to stay up or to make a voting decision, such as mounting databases. Quorum is calculated as the number of nodes divided by two (rounded down), plus one. A three-node cluster therefore needs two votes, so it can suffer a single failure and still maintain quorum. Odd-numbered node sets easily maintain this mathematical relationship; however, even-numbered node sets require the addition of a file share witness.
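The quorum arithmetic described above can be sketched in a few lines of Python. The function names are our own, and the sketch models only the static majority calculation from this section: it assumes that a file share witness is added for even-numbered node sets and ignores any dynamic adjustment of votes by the cluster.

```python
def quorum_votes(voters: int) -> int:
    """Votes needed for a majority: voters / 2 rounded down, plus 1."""
    return voters // 2 + 1


def needs_witness(nodes: int) -> bool:
    """Even-numbered node sets need a file share witness as a tie-breaker."""
    return nodes % 2 == 0


def tolerated_failures(nodes: int) -> int:
    """Voters that can be lost while the remaining voters still hold quorum."""
    voters = nodes + 1 if needs_witness(nodes) else nodes
    return voters - quorum_votes(voters)
```

For example, `quorum_votes(3)` is 2 and `tolerated_failures(3)` is 1, matching the three-node case in the text. A four-node DAG gains a witness, giving five voters, a quorum of three, and tolerance for the loss of two voters.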

FILE SHARE WITNESS LOCATION

The file share witness is an empty file share on a nominated server that acts as an extra vote to establish cluster quorum. The datacenter in which the file share witness is located may be considered the primary datacenter. In Exchange 2013, the file share witness may be located in a third datacenter, separate from the primary and secondary locations, thereby eliminating the risk of split brain. Split brain is a condition that occurs when two datacenters become active for the same database, for example should the WAN link between the primary and secondary datacenter break. Changes are then written to different instances of the same database, which requires considerable effort to undo. Placing the file share witness in a third datacenter, while not recommended, is supported in Exchange 2013, which is the first version of Exchange to support this separation.
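To see why the datacenter hosting the file share witness may be considered the primary, consider which side retains a majority of votes when the WAN link breaks. The Python sketch below is a simplified model under our own assumptions: the DAG has an even total number of nodes (so a witness is in use), the witness sits in one of the two datacenters, and every node within a datacenter remains healthy. The function name and the site labels are hypothetical.

```python
from typing import Optional


def site_with_quorum(
    primary_nodes: int, secondary_nodes: int, witness_site: str
) -> Optional[str]:
    """Return the site that keeps a majority of votes after a WAN break.

    Each node contributes one vote and the file share witness adds one
    vote to the site that hosts it. Returns None if neither side holds
    a majority on its own.
    """
    if witness_site not in ("primary", "secondary"):
        raise ValueError("witness_site must be 'primary' or 'secondary'")

    total_votes = primary_nodes + secondary_nodes + 1  # nodes plus witness
    majority = total_votes // 2 + 1

    votes = {"primary": primary_nodes, "secondary": secondary_nodes}
    votes[witness_site] += 1

    for site, count in votes.items():
        if count >= majority:
            return site
    return None
```

With two nodes in each datacenter, `site_with_quorum(2, 2, "primary")` returns `"primary"`: that side holds three of the five votes, while the secondary datacenter holds only two and loses quorum.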

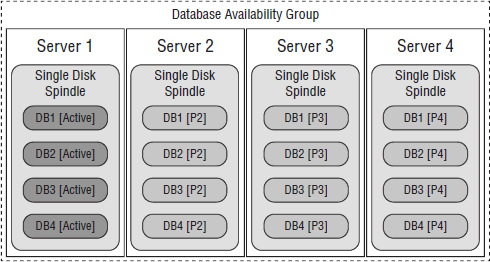

DATABASE DISTRIBUTION

The distribution of databases on database availability group nodes has a direct impact on performance and availability. In order to demonstrate this concept, consider a four-node DAG with four database copies and with all databases active on Server 1, as shown in Figure 4.

FIGURE 4 Uneven database distribution

Server 1 will serve all of the required client interactions, while Servers 2, 3, and 4 remain idle, with the exception of log replay activity. If Server 1 fails, all active copies fail with the server and, depending on the health of the remaining copies, may all activate on Server 2. This is a highly inefficient distribution structure.

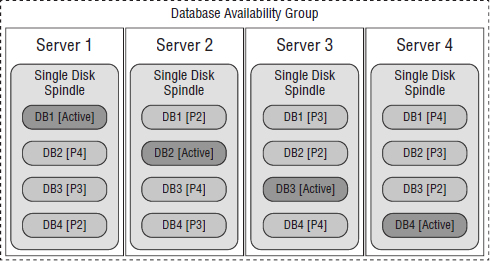

Figure 5 shows how databases are distributed in a manner such that client and server load is balanced and failure domains are minimized (assuming the storage is not shared). Note that this symmetry is precalculated in current versions of the Exchange calculator.

FIGURE 5 Balanced database distribution
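The difference between the two layouts can be illustrated with a small Python sketch. It uses a simple rotation of the server list to assign an activation order to each database. This is our own illustrative scheme and is not the algorithm used by the Exchange calculator; the function names and the database and server labels are likewise assumptions.

```python
from collections import Counter


def activation_preferences(
    databases: list[str], servers: list[str]
) -> dict[str, list[str]]:
    """Map each database to an ordered server list.

    Position 0 hosts the active copy; later positions are the passive
    copies in the order in which they would be activated.
    """
    layout = {}
    for index, database in enumerate(databases):
        shift = index % len(servers)
        layout[database] = servers[shift:] + servers[:shift]
    return layout


def active_counts(layout: dict[str, list[str]]) -> Counter:
    """Count active databases per server when all servers are healthy."""
    return Counter(order[0] for order in layout.values())


def active_after_failure(layout: dict[str, list[str]], failed: str) -> Counter:
    """Count active databases per server after `failed` goes down.

    Each database activates on the first surviving server in its order.
    """
    return Counter(
        next(server for server in order if server != failed)
        for order in layout.values()
    )
```

With four databases on four servers, `active_counts` reports one active database per server. If Server 1 then fails, `active_after_failure` shows that only its single active database moves (to Server 2 in this rotation), rather than all four databases failing over at once as in the uneven layout.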

DETERMINING QUORUM AND DAC

If you have DAC mode enabled on your DAG and a WAN failure occurs, both datacenters will dismount databases in order to prevent split brain. DAC mode may therefore itself be the cause of an outage if it is not implemented correctly. If properly implemented, however, it will act as an extra layer of protection against split brain.

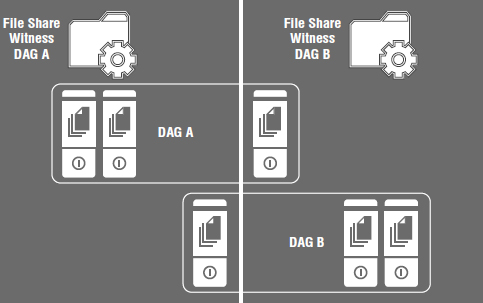

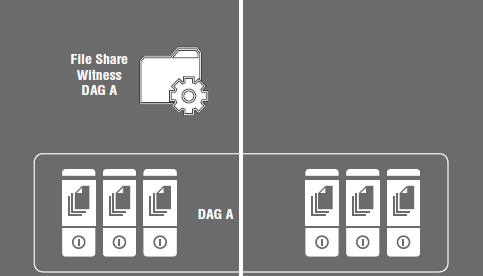

If WAN links are unreliable, and your DAG appears similar to Figure 6, consider planning your DAGs without DAC mode, as per Figure 7.

FIGURE 6 Single DAG with DAC mode

A single DAG may be split into two or more DAGs, with each datacenter maintaining quorum for its own DAG if a WAN failure occurs, similar to what appears in Figure 7.

FIGURE 7 Multiple DAGs without DAC mode