The fundamental design process for an Exchange storage solution normally follows these steps:

1. Define requirements (functional specification).
2. Perform user profile analysis.
3. Complete the Exchange 2013 Server Role Requirements Calculator.
4. Select appropriate storage hardware.
5. Validate with Jetstress.
6. Document the proposed solution.

In the early days of Exchange design, it was rare to see anything other than step 4, and perhaps step 6, practiced, largely because requirements calculators such as today's Exchange 2013 Server Role Requirements Calculator did not exist and we had no way to validate the solution. As Exchange has matured, so too has the design process. Today, a storage solution is expected to be designed to meet the requirements while avoiding over-deploying and operating unnecessary storage hardware.

Over-deploying storage was a common practice with Exchange Server 2003, as design teams tried to make up for performance and architectural limitations within the product. Generally, this proved unsuccessful, since Exchange 2003 was limited by its underlying 32-bit architecture and the memory fragmentation that it caused. However, that didn't stop IT departments from trying to make it go faster by using very expensive, high-performance storage.

Good-quality user profile analysis is vital to the design process. Attempting Exchange storage design without good-quality user profile data and clearly defined requirements is just costly guesswork.

Requirements Gathering

Service Level

The service-level agreement (SLA) is a contract between two parties that sets out, among other things, how a specific service will be operated, who is responsible for its maintenance, what level of performance it will provide, and what level of availability it will achieve.

From an Exchange storage design perspective, we are mainly interested in the service level because it drives our high-availability decisions. If the SLA dictates a high-availability solution, it opens up storage design choices that we might not otherwise have, such as JBOD. JBOD requires a high-availability deployment, because we must have multiple copies of each database to be able to recover in a timely fashion when a disk spindle fails. We may also consider an Exchange native data protection (backupless) solution. Exchange native data protection uses native Exchange features, such as multiple database copies within a DAG and lagged copies, to provide protection against corruption and component failure without the need for point-in-time backups.

If the service level does not require a high-availability solution and Exchange will be deployed without a DAG, our storage is likely to be based on a RAID solution that can tolerate disk spindle failure. We will probably also need a backup solution, since backupless solutions require multiple database copies.

The important point is that simply deploying a massive DAG with JBOD storage is not always the right thing to do. Consider the system requirements carefully, and be sure that you understand the impact of your storage design choices on the rest of the Exchange infrastructure.
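The service-level reasoning above can be summarized as a small decision function. This is a minimal Python sketch, not a sizing tool: the function and field names are hypothetical, and the threshold `MIN_COPIES_FOR_JBOD` is an assumption standing in for the text's "multiple copies" of each database.

```python
from dataclasses import dataclass

# Assumption: the text only says JBOD needs "multiple copies" of each database.
# Raise this threshold if your design guidance calls for more copies.
MIN_COPIES_FOR_JBOD = 2


@dataclass(frozen=True)
class StorageChoices:
    jbod_possible: bool        # JBOD becomes an option, not a mandate
    backupless_possible: bool  # Exchange native data protection becomes an option
    needs_raid: bool           # the storage itself must tolerate spindle failure
    needs_backup: bool         # point-in-time backups are still required


def storage_choices(sla_requires_ha: bool, dag_copies_per_db: int) -> StorageChoices:
    """Map the SLA and the planned DAG copy count to the storage options in the text."""
    if sla_requires_ha and dag_copies_per_db >= MIN_COPIES_FOR_JBOD:
        # Another database copy can take over when a spindle fails, so neither
        # RAID nor point-in-time backups are strictly required.
        return StorageChoices(jbod_possible=True, backupless_possible=True,
                              needs_raid=False, needs_backup=False)
    # No HA requirement, or only a single copy: rely on RAID and on backups.
    return StorageChoices(jbod_possible=False, backupless_possible=False,
                          needs_raid=True, needs_backup=True)


if __name__ == "__main__":
    print(storage_choices(sla_requires_ha=True, dag_copies_per_db=3))
    print(storage_choices(sla_requires_ha=False, dag_copies_per_db=1))
```

Returning options rather than a single answer reflects the caution above: a high-availability SLA makes JBOD and backupless operation possible, but whether they are the right choice still depends on the wider requirements.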

User Profile Analysis

User profile analysis is an area of requirements development that is too often rushed and completed poorly, which frequently leads to problems later in the deployment phase. Fundamentally, user profile analysis is the way to quantify the system workload generated by each mailbox user. Without good user profile data, you cannot complete any of the Exchange planning calculators effectively. The common phrase “Garbage in, garbage out” fits well here: if you guess at the user profile values in the Exchange 2013 Server Role Requirements Calculator, you might as well guess the predictions too.

Given that user profile data is vital to a quality storage design, what exactly do we need, and how can we get it? The following are the most commonly requested core user profile metrics for designing Exchange storage:

Average Message Size in KB: This is the average size of items in the user's mailbox. It is used to predict storage capacity growth, transaction log file generation, dumpster size, DAG replication bandwidth, and so forth.

Messages Sent per Mailbox per Day: This is the average number of messages sent by a user each day. This value is used to predict workload; that is, how much are end users actually doing within the Exchange service?

Messages Received per Mailbox per Day: This is the same as the previous item, except that it counts messages received. Typically, users receive many more messages than they send.

Average Mailbox Size: Normally, this is the anticipated average mailbox quota for the deployment. It is used to determine storage capacity requirements.

Third-Party Devices: The most common example of a third-party device that affects Exchange storage is the BlackBerry. These devices can significantly increase your Exchange database I/O, so it is vital that you speak to your device vendor to understand the extent of this overhead. Check this for every deployment, because it changes from version to version. We often see designs based on the old 3.64 multiplier for BlackBerry Enterprise Server (BES), which was specific to Exchange 2003. Exchange 2010 reduced this multiplier to 2; that is, a BES user adds roughly the same load as one additional Outlook client. Exchange 2013 is expected to require roughly the same IOPS multiplier as Exchange 2010. However, at the time of this writing, no specific BES sizing data is available for Exchange Server 2013.

It is also vital that you understand what percentage of your users will have BlackBerry devices and how much that percentage is expected to grow. In many cases, the root cause of reduced performance is an increase in BlackBerry use, which can easily push I/O requirements beyond the original design targets.

Interestingly, you do not need to scale for most ActiveSync devices, such as Apple iOS and Windows Phone devices, since their I/O workload is already included in the base prediction formula of the Exchange 2013 Server Role Requirements Calculator.
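To make these metrics concrete, the sketch below groups them into one record and derives rough figures from them: daily message volume per mailbox, raw mailbox capacity, and average IOPS per mailbox once a share of users carries a device with an IOPS multiplier. It is a minimal Python illustration under stated assumptions, not the calculator's formula: `UserProfile` and its fields are hypothetical, the 0.10 base IOPS value is made up (in practice it comes from the calculator for your message profile), and applying the device multiplier to the whole per-user IOPS is an assumption. The multipliers 3.64 and 2 are the Exchange 2003 and Exchange 2010 BES values quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserProfile:
    """Core per-mailbox metrics from the list above (example values are illustrative)."""
    avg_message_size_kb: float
    messages_sent_per_day: float
    messages_received_per_day: float
    avg_mailbox_size_mb: float       # normally the planned quota
    device_share: float = 0.0        # fraction of users with a device such as BlackBerry
    device_multiplier: float = 1.0   # IOPS multiplier for those users (assumed to scale all their IOPS)

    def messages_per_day(self) -> float:
        return self.messages_sent_per_day + self.messages_received_per_day

    def daily_message_volume_mb(self) -> float:
        """Rough daily message data per mailbox: messages per day x average size."""
        return self.messages_per_day() * self.avg_message_size_kb / 1024

    def capacity_gb(self, mailboxes: int) -> float:
        """Raw mailbox capacity only; ignores dumpster, indexes, overhead, and growth."""
        return self.avg_mailbox_size_mb * mailboxes / 1024

    def blended_iops(self, base_iops: float) -> float:
        """Average IOPS per mailbox once the device share and multiplier are applied."""
        if not 0.0 <= self.device_share <= 1.0:
            raise ValueError("device_share must be between 0 and 1")
        return base_iops * ((1 - self.device_share) + self.device_share * self.device_multiplier)


if __name__ == "__main__":
    mailboxes = 5000
    base_iops = 0.10  # hypothetical per-mailbox IOPS; take the real figure from the calculator
    for share, multiplier in [(0.20, 2.0), (0.20, 3.64), (0.40, 2.0)]:
        p = UserProfile(75, 30, 120, 2048, share, multiplier)
        print(f"share={share:.0%} multiplier={multiplier}: "
              f"{mailboxes * p.blended_iops(base_iops):.0f} IOPS, "
              f"{p.daily_message_volume_mb():.1f} MB/day per mailbox")
```

With these hypothetical inputs, 5,000 mailboxes come to about 600 IOPS at a 20% BES share with a multiplier of 2, about 764 IOPS if the outdated 3.64 multiplier is used instead, and about 700 IOPS if the BES share grows to 40%. This is why both the correct multiplier and the expected growth in device use matter.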

When discussing Exchange storage design, we are often asked how to obtain user profile values. For Exchange Server 2003 and Exchange Server 2007, the Exchange Server Profile Analyzer (EPA) provides most of this information. However, this tool requires WebDAV, which was dropped in Exchange Server 2010, so it does not work with later versions. Fear not; there is an alternative, which is addressed in this article:

This article explains how to use a script to parse message-tracking log data in Exchange Server 2010 and Exchange Server 2013 to derive the important user profile metrics. Nevertheless, for Exchange Server 2003 or Exchange Server 2007, we still prefer the data from the Exchange Server Profile Analyzer rather than the message-tracking log analysis script, since experience shows that EPA provides more accurate user profile values.
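The idea behind the message-tracking approach can be illustrated with a short Python sketch. It is not the script the article describes. The sample log is hypothetical and simplified: the column names are modeled on Exchange message-tracking log fields, but real logs begin with `#`-prefixed header lines and carry many more fields. The event mapping is also an assumption: a `RECEIVE` event whose `source` is `STOREDRIVER` is treated as a message sent from a mailbox, and a `DELIVER` event as a message received by a mailbox.

```python
import csv
import io

# Hypothetical, simplified excerpt. Column names are modeled on Exchange
# message-tracking log fields; real logs have more fields and "#" header lines.
SAMPLE_LOG = """event-id,source,sender-address,recipient-address,total-bytes
RECEIVE,STOREDRIVER,alice@contoso.com,bob@contoso.com,40960
DELIVER,STOREDRIVER,alice@contoso.com,bob@contoso.com,40960
RECEIVE,SMTP,partner@fabrikam.com,alice@contoso.com,102400
DELIVER,STOREDRIVER,partner@fabrikam.com,alice@contoso.com,102400
"""


def profile_from_tracking_log(text: str, mailboxes: int, days: int) -> dict:
    """Derive sent/received per mailbox per day and average message size in KB."""
    if mailboxes <= 0 or days <= 0:
        raise ValueError("mailboxes and days must be positive")
    sent = received = total_bytes = 0
    for row in csv.DictReader(io.StringIO(text)):
        size = int(row["total-bytes"])
        if row["event-id"] == "RECEIVE" and row["source"] == "STOREDRIVER":
            sent += 1  # assumed: submission from a mailbox = one sent message
        elif row["event-id"] == "DELIVER":
            received += 1  # assumed: delivery to a mailbox = one received message
        else:
            continue  # e.g. RECEIVE over SMTP: the message arriving at the server
        total_bytes += size
    counted = sent + received
    return {
        "sent_per_mailbox_per_day": sent / (mailboxes * days),
        "received_per_mailbox_per_day": received / (mailboxes * days),
        "avg_message_size_kb": total_bytes / counted / 1024 if counted else 0.0,
    }


if __name__ == "__main__":
    print(profile_from_tracking_log(SAMPLE_LOG, mailboxes=2, days=1))
```

For the two-mailbox, one-day sample, this reports 0.5 messages sent and 1.0 received per mailbox per day, with an average message size of 60 KB. A real analysis would also need to handle multiple recipients per row, exclude system mailboxes, and cover a representative period.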

The bottom line for user profile metrics

is to understand exactly what the metric is and then figure out a way

to obtain that information in the best and most practical way possible.

This is particularly applicable when migrating from foreign messaging

systems, such as Lotus Domino. There is no easy way to obtain

user profile data from Domino, but it is possible to calculate most

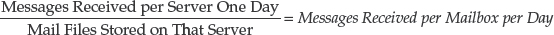

items by estimation. For example, you could use the following formula:

Where user profile data is concerned, anything is better than a random guess; that is, never guess your user profile information without some evidence to back it up. Always base user profile information on observed data, and record the process you used to derive it in your design documentation. This is especially important when you wish to engage in a design review cycle with a third-party consulting organization, because any good consultant will want to understand where the numbers you put into the calculator came from. If the original source of those numbers is not recorded, it becomes impossible to provide any form of performance validation for your design.
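Recording provenance alongside each estimate can be as simple as keeping the source and the method next to the value. The sketch below is a hypothetical Python illustration of that practice using two simple estimates: average message size from total mailbox size and item count, and messages received per day from items received in a sample window. These are not the Domino formula referred to above, and all names and figures are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """A user profile value plus the evidence behind it, for the design document."""
    metric: str
    value: float
    source: str  # where the raw numbers came from
    method: str  # how the value was derived from them


def estimate_avg_message_size_kb(total_mailbox_mb: float, total_items: int,
                                 source: str) -> Estimate:
    # Hypothetical method: average item size = total mailbox data / number of items.
    if total_items <= 0:
        raise ValueError("total_items must be positive")
    return Estimate("Average message size (KB)", total_mailbox_mb * 1024 / total_items,
                    source, "total mailbox size / total item count")


def estimate_received_per_day(items_received: int, mailboxes: int, window_days: int,
                              source: str) -> Estimate:
    # Hypothetical method: items received in a sample window, averaged per mailbox per day.
    if mailboxes <= 0 or window_days <= 0:
        raise ValueError("mailboxes and window_days must be positive")
    return Estimate("Messages received per mailbox per day",
                    items_received / (mailboxes * window_days),
                    source, "items received in window / (mailboxes x days)")


if __name__ == "__main__":
    estimates = [
        estimate_avg_message_size_kb(500_000, 4_000_000, "hypothetical mail database size report"),
        estimate_received_per_day(60_000, 100, 30, "hypothetical 30-day sample of 100 mailboxes"),
    ]
    for e in estimates:
        print(f"{e.metric}: {e.value:.1f} (source: {e.source}; method: {e.method})")
```

Each printed line carries the value together with its source and method, which is exactly what a reviewer needs in order to trace a calculator input back to observed data.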