Exploring Rich Media

These two frameworks encompass more than a dozen new

classes. Although we won’t be able to cover everything in this hour,

we’ll give you a good idea of what’s possible and how to get started.

In addition to these frameworks, we’ll introduce the UIImagePickerController

class. This simple object can be added to your applications to allow

access to the iPhone’s photo library or camera from within your

application.

Media Player Framework

The Media Player framework

is used for playing back video and audio from either local or remote

resources. It can be used to call up a modal iPod interface from your

application, select songs, and manage playback. This is the framework

that provides integration with all the built-in media features that your

phone has to offer. We’ll be making use of five different classes in

our sample code:

MPMoviePlayerController:

Allows playback of a piece of media, either located on the iPhone file

system or through a remote URL. The player controller can provide a GUI

for scrubbing through video, pausing, fast forwarding, or rewinding.

MPMediaPickerController:

Presents the user with an interface for choosing media to play. You can

filter the files displayed by the media picker or allow selection of

any file from the media library.

MPMediaItem: A single piece of media, such as a song.

MPMediaItemCollection: Represents a collection of media items that will be used for playback. An instance of MPMediaPickerController returns an instance of MPMediaItemCollection that can be used directly with the next class—the music player controller.

MPMusicPlayerController:

Handles the playback of media items and media item collections. Unlike

the movie player controller, the music player works “behind the

scenes”—allowing playback from anywhere in your application, regardless

of what is displayed on the screen.

Of course, many more

methods are available in each of these classes. We’ll be using a few

simple features for starting and stopping playback, but there is an

amazing amount of additional functionality that can be added to your

applications with only a limited amount of coding involved.
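To make the relationship between these classes more concrete, here is a minimal sketch of how a media picker, a media item collection, and the music player might fit together. It assumes the chooseiPod: action, the musicPlayer property, and the nowPlaying outlet declared in this hour's header file; the prompt text is purely illustrative, and the finished application may be organized differently.

```objc
#import <MediaPlayer/MediaPlayer.h>

// These methods would live inside @implementation MediaPlaygroundViewController.

// Show the modal iPod interface, limited to music.
- (IBAction)chooseiPod:(id)sender {
    MPMediaPickerController *musicPicker =
        [[MPMediaPickerController alloc] initWithMediaTypes:MPMediaTypeMusic];
    musicPicker.prompt = @"Choose Songs to Play";   // illustrative text
    musicPicker.allowsPickingMultipleItems = YES;
    musicPicker.delegate = self;
    [self presentModalViewController:musicPicker animated:YES];
    [musicPicker release];   // the presenting controller retains the picker
}

// Called by the picker with the user's selection as a collection.
- (void)mediaPicker:(MPMediaPickerController *)mediaPicker
  didPickMediaItems:(MPMediaItemCollection *)mediaItemCollection {
    // Hand the collection directly to the music player.
    self.musicPlayer = [MPMusicPlayerController iPodMusicPlayer];
    [musicPlayer setQueueWithItemCollection:mediaItemCollection];
    [musicPlayer play];

    // Show the title of the first chosen item (an MPMediaItem).
    NSArray *items = [mediaItemCollection items];
    if ([items count] > 0) {
        MPMediaItem *firstItem = [items objectAtIndex:0];
        nowPlaying.text = [firstItem valueForProperty:MPMediaItemPropertyTitle];
    }
    [self dismissModalViewControllerAnimated:YES];
}

// Called if the user leaves the picker without choosing anything.
- (void)mediaPickerDidCancel:(MPMediaPickerController *)mediaPicker {
    [self dismissModalViewControllerAnimated:YES];
}
```

The sketch reads the title from the first picked item rather than from the player's nowPlayingItem, because the player may not have updated that property at the moment play returns.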

By the Way

Just

as a reminder, a modal view is one that the user must interact with

before a task can be completed. Modal views, such as the Media Player’s

iPod interface, are added on top of your existing views using a view controller’s presentModalViewController:animated: method. They are dismissed with dismissModalViewControllerAnimated:.

AV Foundation Framework

Although the Media Player

framework is great for all your general media playback needs, Apple

recommends the AV Foundation framework for most audio playback functions

that exceed the 30 seconds allowed by System Sound Services. In

addition, the AV Foundation framework offers audio recording features,

making it possible to record new sound files directly in your

application. This might sound like a complex programming task, but we’ll

do exactly that in our sample application.

You need just two new classes to add audio playback and recording to your apps:

AVAudioRecorder:

Records audio (in a variety of different formats) to a local

file on the iPhone. The recording process can even continue while other

functions are running in your application.

AVAudioPlayer:

Plays back audio files of any length. Using this class, you can

implement game soundtracks or other complex audio applications. You have

complete control over the playback, including the ability to layer

multiple sounds on top of one another.
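As a rough illustration of how little code these two classes need, the following sketch records to a file and plays it back. The method names, the sound.caf file name, and the recording settings are assumptions chosen for the example; only the soundRecorder property comes from this hour's header file. Depending on the iOS version, you may also need to configure the audio session before recording will work.

```objc
#import <AVFoundation/AVFoundation.h>
#import <CoreAudio/CoreAudioTypes.h>

// These methods would live inside @implementation MediaPlaygroundViewController.

// Hypothetical helper: where the recording is stored.
- (NSURL *)soundFileURL {
    NSString *path = [NSTemporaryDirectory()
                      stringByAppendingPathComponent:@"sound.caf"];
    return [NSURL fileURLWithPath:path];
}

// Hypothetical helper: create a recorder and start recording.
- (void)startRecording {
    // Example settings: 44.1kHz, AAC, stereo, high quality.
    NSDictionary *settings = [NSDictionary dictionaryWithObjectsAndKeys:
        [NSNumber numberWithFloat:44100.0], AVSampleRateKey,
        [NSNumber numberWithInt:kAudioFormatMPEG4AAC], AVFormatIDKey,
        [NSNumber numberWithInt:2], AVNumberOfChannelsKey,
        [NSNumber numberWithInt:AVAudioQualityHigh], AVEncoderAudioQualityKey,
        nil];
    NSError *error = nil;
    AVAudioRecorder *recorder =
        [[AVAudioRecorder alloc] initWithURL:[self soundFileURL]
                                    settings:settings
                                       error:&error];
    if (recorder == nil) {
        NSLog(@"Could not create the recorder: %@", error);
        return;
    }
    self.soundRecorder = recorder;   // the retain property keeps it alive
    [recorder release];
    [soundRecorder record];
}

// Hypothetical helper: finish the recording.
- (void)stopRecording {
    [soundRecorder stop];
}

// Hypothetical helper: play back whatever was recorded.
- (void)playRecording {
    NSError *error = nil;
    AVAudioPlayer *player =
        [[AVAudioPlayer alloc] initWithContentsOfURL:[self soundFileURL]
                                               error:&error];
    if (player == nil) {
        NSLog(@"Could not create the player: %@", error);
        return;
    }
    player.delegate = self;
    [player play];   // released in the delegate method below
}

// AVAudioPlayerDelegate: clean up once playback ends.
- (void)audioPlayerDidFinishPlaying:(AVAudioPlayer *)player
                       successfully:(BOOL)flag {
    [player release];
}
```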

The Image Picker

The Image Picker (UIImagePickerController) works similarly to the MPMediaPickerController,

but rather than presenting a view where songs can be selected, it displays the

user’s photo library. When the user chooses a

photo, the image picker will hand us a UIImage object based on the user’s selection.

Like the MPMediaPickerController,

the image picker is presented within your application modally. The good

news is that both of these objects implement their own view and view

controller, so there’s very little work that we need to do to get them

to display other than a quick call to presentModalViewController.
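The following sketch shows roughly what presenting the image picker and receiving the chosen UIImage could look like. It assumes the chooseImage: action, the toggleCamera switch, and the chosenImage image view declared in this hour's header file, and it is one possible arrangement rather than the definitive implementation.

```objc
#import <UIKit/UIKit.h>

// These methods would live inside @implementation MediaPlaygroundViewController.

// Present the image picker modally, using the camera only if it is
// requested and actually available on this device.
- (IBAction)chooseImage:(id)sender {
    UIImagePickerController *imagePicker =
        [[UIImagePickerController alloc] init];
    if ([toggleCamera isOn] &&
        [UIImagePickerController
            isSourceTypeAvailable:UIImagePickerControllerSourceTypeCamera]) {
        imagePicker.sourceType = UIImagePickerControllerSourceTypeCamera;
    } else {
        imagePicker.sourceType = UIImagePickerControllerSourceTypePhotoLibrary;
    }
    imagePicker.delegate = self;
    [self presentModalViewController:imagePicker animated:YES];
    [imagePicker release];   // the presenting controller retains the picker
}

// The picker hands back a dictionary; the original image is stored
// under UIImagePickerControllerOriginalImage.
- (void)imagePickerController:(UIImagePickerController *)picker
didFinishPickingMediaWithInfo:(NSDictionary *)info {
    chosenImage.image =
        [info objectForKey:UIImagePickerControllerOriginalImage];
    [self dismissModalViewControllerAnimated:YES];
}

// Dismiss the picker if the user cancels.
- (void)imagePickerControllerDidCancel:(UIImagePickerController *)picker {
    [self dismissModalViewControllerAnimated:YES];
}
```

Checking isSourceTypeAvailable: before selecting the camera keeps the sketch usable in the iPhone Simulator and on devices without a camera.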

As you can see, there are quite a few things to cover, so let’s get started using these features in a real iPhone application.

Preparing the Media Playground Application

This exercise will be less about creating a real-world application

and more about building a sandbox for testing out the rich media

classes. The finished application will show embedded or fullscreen

video, record and play audio, browse and display images from the photo

library or camera, and browse and select iPod music for playback.

Implementation Overview

There

will be four main components to the application. First, a video player

that plays an MPEG-4 video file when a button is pressed; fullscreen

presentation will be controlled by a toggle switch. Second, we’ll create

an audio recorder with playback features. Third, we’ll add a button

that shows the iPhone photo library or camera and a UIImageView

that displays the chosen photo. A toggle switch will control the image

source. Finally, we’ll provide the user the ability to choose songs from

the iPod library and start or pause playback. The title of the

currently playing song will also be displayed onscreen in a UILabel.
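As a preview of the first component, here is a hedged sketch of a button action that plays a bundled video and honors a fullscreen switch. The movie.m4v file name, the frame coordinates, and the playMediaFinished: method name are assumptions made for this example; playMedia: and toggleFullscreen are the action and outlet declared in this hour's header file.

```objc
#import <MediaPlayer/MediaPlayer.h>

// These methods would live inside @implementation MediaPlaygroundViewController.

- (IBAction)playMedia:(id)sender {
    // Assumed resource: a file named movie.m4v added to the project.
    NSString *movieFile = [[NSBundle mainBundle] pathForResource:@"movie"
                                                          ofType:@"m4v"];
    if (movieFile == nil) {
        NSLog(@"movie.m4v was not found in the application bundle");
        return;
    }
    MPMoviePlayerController *moviePlayer =
        [[MPMoviePlayerController alloc]
            initWithContentURL:[NSURL fileURLWithPath:movieFile]];

    // Embed the player's view; the frame is an arbitrary example.
    [moviePlayer.view setFrame:CGRectMake(145.0, 20.0, 155.0, 100.0)];
    [self.view addSubview:moviePlayer.view];

    // Ask to be told when playback ends so we can clean up.
    [[NSNotificationCenter defaultCenter]
        addObserver:self
           selector:@selector(playMediaFinished:)
               name:MPMoviePlayerPlaybackDidFinishNotification
             object:moviePlayer];

    [moviePlayer play];
    if ([toggleFullscreen isOn]) {
        [moviePlayer setFullscreen:YES animated:YES];
    }
}

// Remove the embedded view and release the player allocated above.
- (void)playMediaFinished:(NSNotification *)notification {
    MPMoviePlayerController *moviePlayer = [notification object];
    [[NSNotificationCenter defaultCenter]
        removeObserver:self
                  name:MPMoviePlayerPlaybackDidFinishNotification
                object:moviePlayer];
    [moviePlayer.view removeFromSuperview];
    [moviePlayer release];
}
```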

Setting Up the Project Files

Begin by creating a new View-based iPhone Application project in Xcode. Name the new project MediaPlayground.

Within Xcode, open the MediaPlaygroundViewController.h file and update the file to contain the #import directives, outlets, actions, and properties shown in Listing 1.

Listing 1.

    #import <UIKit/UIKit.h>
    #import <MediaPlayer/MediaPlayer.h>
    #import <AVFoundation/AVFoundation.h>
    #import <CoreAudio/CoreAudioTypes.h>

    @interface MediaPlaygroundViewController : UIViewController
        <MPMediaPickerControllerDelegate,AVAudioPlayerDelegate,
        UIImagePickerControllerDelegate,UINavigationControllerDelegate> {
        IBOutlet UISwitch *toggleFullscreen;
        IBOutlet UISwitch *toggleCamera;
        IBOutlet UIButton *recordButton;
        IBOutlet UIButton *ipodPlayButton;
        IBOutlet UILabel *nowPlaying;
        IBOutlet UIImageView *chosenImage;
        AVAudioRecorder *soundRecorder;
        MPMusicPlayerController *musicPlayer;
    }

    -(IBAction)playMedia:(id)sender;
    -(IBAction)recordAudio:(id)sender;
    -(IBAction)playAudio:(id)sender;
    -(IBAction)chooseImage:(id)sender;
    -(IBAction)chooseiPod:(id)sender;
    -(IBAction)playiPod:(id)sender;

    @property (nonatomic,retain) UISwitch *toggleFullscreen;
    @property (nonatomic,retain) UISwitch *toggleCamera;
    @property (nonatomic,retain) UIButton *recordButton;
    @property (nonatomic, retain) UIButton *ipodPlayButton;
    @property (nonatomic, retain) UILabel *nowPlaying;
    @property (nonatomic, retain) UIImageView *chosenImage;
    @property (nonatomic, retain) AVAudioRecorder *soundRecorder;
    @property (nonatomic, retain) MPMusicPlayerController *musicPlayer;

    @end

Most of this code should

look familiar to you. We’re defining several outlets, actions, and

properties for interface elements, as well as declaring the instance

variables soundRecorder and musicPlayer that will hold our audio recorder and iPod music player, respectively.

There are a few additions

that you might not recognize. To begin, we need to import three new

interface files so that we can access the classes and methods in the

Media Player and AV Foundation frameworks. The CoreAudioTypes.h file is required so that we can specify a file format for recording audio.

Next, you’ll notice that we’ve declared that the MediaPlaygroundViewController class conforms to the MPMediaPickerControllerDelegate, AVAudioPlayerDelegate, UIImagePickerControllerDelegate, and UINavigationControllerDelegate protocols.

These will help us detect when the user has finished choosing music and

photos, and when an audio file is done playing. That leaves UINavigationControllerDelegate.

Why do we need this? The image picker is a subclass of UINavigationController, and its

delegate must conform to both protocols. The good news is that you won’t need

to implement any additional methods for it!

After you’ve finished the

interface file, save your changes and open the view controller

implementation file, MediaPlaygroundViewController.m. Edit the file to

include the following @synthesize directives after the @implementation line:

    @synthesize toggleFullscreen;
    @synthesize toggleCamera;
    @synthesize soundRecorder;
    @synthesize recordButton;
    @synthesize musicPlayer;
    @synthesize ipodPlayButton;
    @synthesize nowPlaying;
    @synthesize chosenImage;

Finally, for everything that we’ve retained, be sure to add an appropriate release line within the view controller’s dealloc method:

    - (void)dealloc {
        [toggleFullscreen release];
        [toggleCamera release];
        [soundRecorder release];
        [recordButton release];
        [musicPlayer release];
        [ipodPlayButton release];
        [nowPlaying release];
        [chosenImage release];
        [super dealloc];
    }

Now, we’ll take a few

minutes to configure the interface XIB file, and then we’ll explore the

classes and methods that can (literally) make our apps sing.

Creating the Media Playground Interface

Open the

MediaPlaygroundViewController.xib file in Interface Builder and begin

laying out the view. This application will have a total of six buttons (UIButton), two switches (UISwitch), three labels (UILabel), and a UIImageView. In addition, we need to leave room for an embedded video player that will be added programmatically.

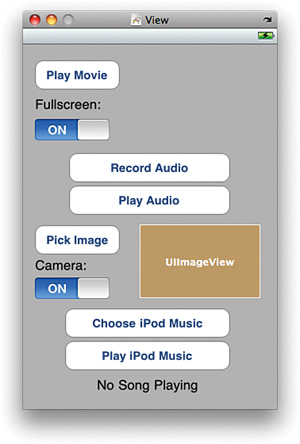

Figure 1

represents a potential design for the application. Feel free to use

this approach with your layout, or modify it to suit your fancy.

Did you Know?

You might want to consider using the Attributes Inspector to set the UIImageView mode to Aspect Fill or Aspect Fit to make sure your photos look right within the view.
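If you would rather set the image view's mode in code than in Interface Builder, a minimal sketch might look like the following. It assumes the chosenImage outlet from this hour's header file and uses the view controller's viewDidLoad method as a convenient place for the setup; choosing Aspect Fit here is only an example.

```objc
#import <UIKit/UIKit.h>

// This method would live inside @implementation MediaPlaygroundViewController.

- (void)viewDidLoad {
    [super viewDidLoad];
    // Scale the photo to fit inside the view without distorting it.
    chosenImage.contentMode = UIViewContentModeScaleAspectFit;
    // Only matters for modes such as Aspect Fill, where the image
    // can extend past the bounds of the view.
    chosenImage.clipsToBounds = YES;
}
```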

Connecting the Outlets and Actions

Finish up the interface

work by connecting the buttons, switches, label, and image view to the corresponding outlets

and actions that were defined earlier.

For reference, the connections that you will be creating are listed in Table 1. Be aware that some UI elements need to connect to both an action and an outlet so that we can easily modify their properties in the application.

Table 1. Interface Elements and Actions

| Element Name (Type) | Outlet/Action | Purpose |
|---|---|---|
| Play Movie (UIButton) | Action: playMedia: | Initiates playback in an embedded movie player, displaying a video file |
| Fullscreen On/Off Switch (UISwitch) | Outlet: toggleFullscreen | Toggles a property of the movie player, embedding it or presenting the video fullscreen |
| Record Audio (UIButton) | Action: recordAudio:<br>Outlet: recordButton | Starts and stops audio recording |
| Play Audio (UIButton) | Action: playAudio: | Plays the currently recorded audio sample |
| Pick Image (UIButton) | Action: chooseImage: | Opens a modal view displaying the user’s photo library |
| Camera On/Off Switch (UISwitch) | Outlet: toggleCamera | Toggles a property of the image selector, presenting either the photo library or camera |
| UIImageView | Outlet: chosenImage | The image view that will be used for displaying a photo from the user’s photo library |
| Choose iPod Music (UIButton) | Action: chooseiPod: | Opens a modal view displaying the user’s music library for creating a playlist |
| Play iPod Music (UIButton) | Action: playiPod:<br>Outlet: ipodPlayButton | Plays or pauses the current playlist |
| No Song Playing (UILabel) | Outlet: nowPlaying | Displays the title of the currently playing song (if any) |

After

creating all the connections to and from the File’s Owner icon, save

and close the XIB file. We’ve now created the basic skeleton for all the

media capabilities we’ll be adding in the rest of the exercise.