Redundant array of inexpensive disks (RAID) is used to configure a disk subsystem to provide better performance and fault tolerance for an application. The basic idea behind using RAID is that you spread data across multiple disk drives so that I/Os are spread across these drives. RAID has special significance for database-related applications, where you want to spread random I/Os (data changes) and sequential I/Os (for the transaction log) across different disk subsystems to minimize disk head movement and maximize I/O performance.

The four RAID levels of most interest in database implementations are as follows:

RAID 0 is data striping with no redundancy or fault tolerance.

RAID 1 is mirroring, where every disk in the array has a mirror (copy).

RAID 5 is striping with parity, where parity information for data on one disk is spread across the other disks in the array. The contents of a single disk can be re-created from the parity information stored on the other disks in the array.

RAID 10, or 1+0, is a combination of RAID 1 and RAID 0. Data is striped across all drives in the array, and each disk has a mirrored duplicate, offering the fault tolerance of RAID 1 with the performance advantages of RAID 0.

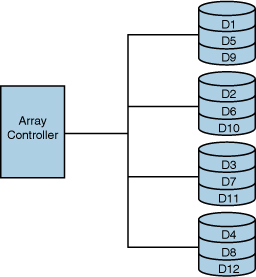

RAID Level 0

RAID Level 0 provides the best I/O performance of all the RAID levels. A file has sequential segments striped across each drive in the array. Data is written in a round-robin fashion to ensure that data is evenly balanced across all drives in the array. However, if a media failure occurs, no fault tolerance is provided, and all data stored in the array is lost. RAID 0 should not be used for a production database where data loss or loss of system availability is not acceptable. RAID 0 is occasionally used for tempdb to provide the best possible read and (especially) write performance. RAID 0 is helpful for random read requirements, such as those that occur on tempdb and in data segments.
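The round-robin placement described above can be illustrated with a short Python sketch. It is only a model of the idea, not how any particular controller works: the function names `locate_stripe_unit` and `stripe_layout` are hypothetical, and a "stripe unit" here simply stands for one fixed-size segment of the file.

```python
def locate_stripe_unit(logical_unit: int, num_disks: int) -> tuple[int, int]:
    """Map a logical stripe unit to (disk index, unit offset on that disk).

    Assumes simple round-robin striping with no redundancy (RAID 0).
    """
    if num_disks < 1:
        raise ValueError("num_disks must be at least 1")
    if logical_unit < 0:
        raise ValueError("logical_unit must be non-negative")
    disk = logical_unit % num_disks      # which drive receives this unit
    offset = logical_unit // num_disks   # position of the unit on that drive
    return disk, offset


def stripe_layout(total_units: int, num_disks: int) -> list[list[int]]:
    """Return, for each disk, the list of logical units it would hold."""
    disks: list[list[int]] = [[] for _ in range(num_disks)]
    for unit in range(total_units):
        disk, _ = locate_stripe_unit(unit, num_disks)
        disks[disk].append(unit)
    return disks


if __name__ == "__main__":
    # Show how ten sequential segments spread over a four-drive array.
    for index, units in enumerate(stripe_layout(10, 4)):
        print(f"disk {index}: units {units}")
```

Because consecutive units land on different drives, a large sequential transfer or a burst of random requests tends to be shared among all the drives. The same layout also shows why losing any one drive loses part of every large file.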

Tip

Although the data stored in tempdb is temporary and noncritical, failure of a RAID 0 stripeset containing tempdb results in loss of system availability because SQL Server requires a functioning tempdb to carry out many of its activities. If loss of system availability is not an option, you should not put tempdb on a RAID 0 array. You should use one of the RAID technologies that provide redundancy.

If momentary loss of system availability is acceptable in exchange for the improved I/O and reduced cost of RAID 0, recovery of tempdb is relatively simple. The tempdb database is re-created each time the SQL Server instance is restarted. If the disk that contained your tempdb was lost, you could replace the failed disk, restart SQL Server, and the files would automatically be re-created. This scenario is complicated if the failed disk with the tempdb file also contains your master database or other system databases.

RAID 0 is the least expensive of the RAID configurations because 100% of the disks in the array are available for data, and none are used to provide fault tolerance. Performance is also the best of the RAID configurations because there is no overhead required to maintain redundant data.

Figure 1 depicts a RAID 0 disk array configuration.

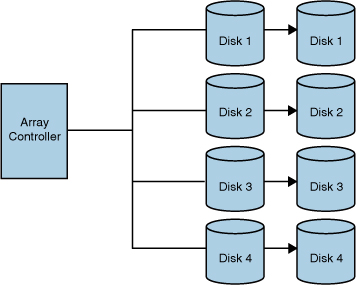

RAID Level 1

With RAID 1, known as disk mirroring, every write to the primary disk is written to the mirror set. Either member of the set can satisfy a read request. RAID 1 devices provide excellent fault tolerance because in the event of a media failure, either on the primary disk or mirrored disk, the system can still continue to run. Writes are much faster than with RAID 5 arrays because no parity information needs to be calculated first. The data is simply written twice.

RAID 1 arrays are best for transaction logs and index filegroups. RAID 1 provides the best fault tolerance and best write performance, which is critical to log and index performance. Because log writes are sequential write operations and not random access operations, they are best supported by a RAID 1 configuration.

RAID 1 arrays are the most expensive RAID configurations because only 50% of total disk space is available for actual storage. The rest is used to provide fault tolerance.

Figure 2 shows a RAID 1 configuration.

Because RAID 1 requires that the same data be written to two drives at the same time, write performance is slightly slower than when writing data to a single drive: the write is not considered complete until both writes have been done. Using a disk controller with a battery-backed write cache can mitigate this write penalty because the write is considered complete as soon as it reaches the battery-backed cache. The actual writes to the disks occur in the background.

RAID 1 read performance is often better than that of a single disk drive because most controllers now support split seeks. Split seeks allow each disk in the mirror set to be read independently of the other, thereby supporting concurrent reads.

RAID Level 10

RAID 10, or RAID 1+0, is a combination of mirroring and striping. It is implemented as a stripe of mirrored drives. The drives are mirrored first, and then a stripe is created across the mirrors to improve performance. This should not be confused with RAID 0+1, which is different and is implemented by first striping the disks and then mirroring.
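As a rough illustration of "mirror first, then stripe," the following Python sketch maps a logical stripe unit onto a RAID 10 array. The drive numbering (pair p consists of drives 2p and 2p+1) and the function names `raid10_targets` and `raid10_survives` are assumptions made for this example, not a description of any vendor's layout.

```python
def raid10_targets(logical_unit: int, num_pairs: int) -> tuple[tuple[int, int], int]:
    """Return ((drive_a, drive_b), offset) for a logical stripe unit.

    Units are striped round-robin across the mirrored pairs, and each
    unit is written to both drives of the pair it lands on.
    """
    if num_pairs < 2:
        raise ValueError("this sketch assumes at least two mirrored pairs")
    if logical_unit < 0:
        raise ValueError("logical_unit must be non-negative")
    pair = logical_unit % num_pairs       # striping step: choose the mirror set
    offset = logical_unit // num_pairs    # position within that mirror set
    return (2 * pair, 2 * pair + 1), offset


def raid10_survives(failed_drives: set[int], num_pairs: int) -> bool:
    """The array stays available while every pair keeps at least one drive."""
    return all(
        not {2 * pair, 2 * pair + 1} <= failed_drives
        for pair in range(num_pairs)
    )


if __name__ == "__main__":
    print(raid10_targets(5, 3))        # drives and offset for logical unit 5
    print(raid10_survives({0, 3}, 3))  # one drive lost from two different pairs
    print(raid10_survives({2, 3}, 3))  # both drives of the same pair lost
```

In this model the array tolerates several drive failures as long as no mirrored pair loses both of its members, which is the fault tolerance of RAID 1 applied to each stripe member.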

Many businesses with high-volume OLTP applications opt for RAID 10 configurations. The shrinking cost of disk drives and the heavy database demands of today’s business applications are making this a much more viable option. If you find that your transaction log or index segment is pegging your RAID 1 array at 100% usage, you can implement a RAID 10 array to get better performance. This type of RAID carries with it all the fault tolerance (and cost!) of a RAID 1 array, with all the performance benefits of RAID 0 striping.

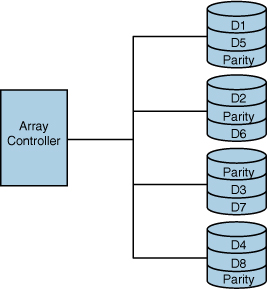

RAID Level 5

RAID 5 is most commonly known as striping with parity. In this configuration, data is striped across multiple disks in large blocks. At the same time, parity bits are written across all the disks for a given block. Information is always stored in such a way that any one disk can be lost without any information in the array being lost. In the event of a disk failure, the system can still continue to run (at a reduced performance level) without downtime by using the parity information to reconstruct the data lost on the missing drive.
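Parity in RAID 5 is conventionally computed with a bytewise XOR, which is what makes single-disk reconstruction possible. The Python sketch below shows the principle on three tiny data blocks. The helper name `xor_blocks` and the sample block contents are made up for illustration, and real arrays work on much larger blocks and rotate the parity position from stripe to stripe.

```python
from functools import reduce
from operator import xor


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Return the bytewise XOR of a list of equal-length byte blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    if len({len(block) for block in blocks}) != 1:
        raise ValueError("all blocks must have the same length")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(xor, column) for column in zip(*blocks))


if __name__ == "__main__":
    # One stripe: three data blocks plus their parity block.
    data_blocks = [b"SQL1", b"data", b"logs"]
    parity = xor_blocks(data_blocks)

    # Pretend the drive holding data_blocks[1] has failed.
    survivors = [data_blocks[0], data_blocks[2], parity]
    rebuilt = xor_blocks(survivors)
    print(rebuilt == data_blocks[1])
```

XORing the surviving blocks with the parity block cancels out everything except the missing block. This is also why a degraded array is slower: every read of the lost drive's data requires reading all the other drives in the stripe.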

Some arrays provide “hot-standby” disks. The RAID controller uses the standby disk to rebuild a failed drive automatically, using the parity information stored on all the other drives in the array. During the rebuild process, performance is markedly worse.

The fault tolerance of RAID 5 is usually sufficient, but if more than one drive in the array fails, you lose the entire array. It is recommended that a spare drive be kept on hand in the event of a drive failure, so the failed drive can be replaced quickly before any other drives fail.

Note

Many of the RAID solutions available today support “hot-spare” drives. A hot-spare drive is connected to the array but doesn’t store any data. When the RAID system detects a drive failure, the contents of the failed drive are re-created on the hot-spare drive, and it is automatically swapped into the array in place of the failed drive. The failed drive can then be manually removed from the array and replaced with a working drive, which becomes the new hot spare.

RAID 5 provides excellent read performance but expensive write performance. A small write operation on a RAID 5 array requires two writes: one to the disk holding the data block and one to the disk holding the parity for that stripe. Before it can perform those writes, the controller typically must read the old data block and the old parity block so that it can calculate the new parity. A single write operation therefore causes four I/Os on a RAID 5 array (two reads and two writes). For this reason, putting log files or tempdb on a RAID 5 array is not recommended. Index filegroups, which suffer worse than data filegroups from bad write performance, are also poor candidates for RAID 5 arrays. Data filegroups where more than 10% of the I/Os are writes are also not good candidates for RAID 5 arrays.
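To make the write cost concrete, here is a small Python sketch under two stated assumptions: first, that a small RAID 5 write follows the commonly described read-modify-write sequence (read old data, read old parity, write new data, write new parity); second, that the per-write I/O counts are 1 for RAID 0, 2 for RAID 1 and RAID 10, and 4 for RAID 5, as the text describes. The names `raid5_new_parity`, `WRITE_IOS`, and `backend_ios` are hypothetical.

```python
# Physical I/Os generated by one logical write (assumed values, see lead-in).
WRITE_IOS = {"RAID 0": 1, "RAID 1": 2, "RAID 10": 2, "RAID 5": 4}


def raid5_new_parity(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """Compute the updated parity block for a small RAID 5 write.

    The controller needs the old data and old parity (two reads) before it
    can write the new data and new parity (two writes).
    """
    if not (len(old_data) == len(old_parity) == len(new_data)):
        raise ValueError("all blocks must have the same length")
    return bytes(p ^ o ^ n for p, o, n in zip(old_parity, old_data, new_data))


def backend_ios(reads: int, writes: int, level: str) -> int:
    """Estimate physical I/Os for a workload of logical reads and writes."""
    return reads + writes * WRITE_IOS[level]


if __name__ == "__main__":
    # A workload with 10% writes: 900 logical reads and 100 logical writes.
    for level in WRITE_IOS:
        print(level, backend_ios(900, 100, level))
```

Even at 10% writes, this rough model has the RAID 5 array performing noticeably more physical I/O than a mirrored or striped array for the same logical workload, and the gap grows as the write share rises. Controller caching and full-stripe writes can reduce the cost in practice, so treat the numbers as an upper-bound style estimate rather than a measurement.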

Note that if write performance is not an issue in your environment (for example, in a DSS/data warehousing environment), you should, by all means, use RAID 5 for your data and index segments.

In any environment, you should avoid putting tempdb on a RAID 5 array. tempdb typically receives heavy write activity, and it performs better on a RAID 1 or RAID 0 array.

RAID 5 is a relatively economical means of providing fault tolerance. No matter how many drives are in the array, only the space equivalent to a single drive is used to support fault tolerance. This method becomes more economical with more drives in the array. You must have at least three drives in a RAID 5 array. Three drives would require that 33% of available disk space be used for fault tolerance, four would require 25%, five would require 20%, and so on.
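The capacity figures quoted for each RAID level follow from simple arithmetic, sketched below in Python. The function name `usable_fraction` is hypothetical, and the sketch assumes all drives in an array are the same size.

```python
def usable_fraction(level: str, num_drives: int) -> float:
    """Fraction of raw disk space available for data at a given RAID level."""
    if level == "RAID 0":
        if num_drives < 2:
            raise ValueError("RAID 0 striping needs at least two drives")
        return 1.0                              # nothing reserved for redundancy
    if level == "RAID 1":
        if num_drives != 2:
            raise ValueError("this sketch models RAID 1 as a two-drive mirror")
        return 0.5                              # every block is stored twice
    if level == "RAID 10":
        if num_drives < 4 or num_drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least four")
        return 0.5                              # a stripe of mirrored pairs
    if level == "RAID 5":
        if num_drives < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return (num_drives - 1) / num_drives    # one drive's worth of parity
    raise ValueError(f"unknown RAID level: {level}")


if __name__ == "__main__":
    for drives in (3, 4, 5, 8):
        overhead = 1 - usable_fraction("RAID 5", drives)
        print(f"RAID 5 with {drives} drives: {overhead:.0%} used for fault tolerance")
```

For three, four, and five drives this gives the 33%, 25%, and 20% overheads quoted above, and the overhead keeps shrinking as drives are added, while mirrored configurations stay at 50% regardless of array size.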

Figure 3 shows a RAID 5 configuration.

Note

Although the recommendations for using the various RAID levels presented here can help ensure that your database performance will be optimal, reality often dictates that your optimum disk configuration might not be available. You may be given a server with a single RAID 5 array and told to make it work. Although RAID 5 is not optimal for tempdb or transaction logs, the write penalty can be mitigated by using a controller with a battery-backed write cache.

If possible, you should also try to stripe database activity across multiple RAID 5 arrays rather than a single large RAID 5 array to avoid overdriving the disks in the array.