2. Multiple data files

A common discussion point on database file configuration is the number of data files that should be created for a database. For example, should a 100GB database contain a single file, four 25GB files, or some other combination? In answering this question, we need to consider both performance and manageability.

Performance

A common performance-tuning recommendation is to create one file per CPU core available to the database instance. For example, a SQL Server instance with access to two quad-core CPUs should create eight database files. While having multiple data files is certainly recommended for the tempdb database, it isn't necessarily required for user databases.

The one-file-per-CPU-core suggestion is useful in avoiding allocation contention issues. Each database file holds an allocation bitmap used for allocating space to objects within the file. The tempdb database, by its very nature, is used for the creation of short-term objects used for various purposes. Given that tempdb is used by all databases within a SQL Server instance, there's potentially a very large number of objects being allocated each second; therefore, using multiple files reduces contention on any single allocation bitmap, resulting in higher throughput.

It's very rare for a user database to have allocation contention. Therefore, splitting a database into multiple files is primarily done to enable the use of filegroups and/or for manageability reasons.

Manageability

Consider a database configured with a single file stored on a 1TB disk partition, with the database file currently at 900GB. A migration project requires the database to be moved to a new server that has been allocated three 500GB drives. Obviously, the 900GB file won't fit on any of the three new drives. There are various ways of addressing this problem, but avoiding it in the first place by using multiple smaller files is arguably the easiest.
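
To make the migration example concrete, the following Python sketch checks whether a set of data files can be placed on the target drives. It uses a simple first-fit-decreasing heuristic. The function name `place_files` is hypothetical, and the four 225GB files are just one illustrative way of splitting the 900GB database.

```python
def place_files(file_sizes_gb, drive_sizes_gb):
    """Assign data files to drives with a first-fit-decreasing heuristic.

    Returns a dict {file_index: drive_index}, or None if any file
    cannot fit on any drive. Growth headroom is ignored.
    """
    free = list(drive_sizes_gb)
    placement = {}
    # Try the largest files first; they are the hardest to place.
    order = sorted(range(len(file_sizes_gb)),
                   key=lambda i: file_sizes_gb[i], reverse=True)
    for i in order:
        for d, space in enumerate(free):
            if file_sizes_gb[i] <= space:
                placement[i] = d
                free[d] -= file_sizes_gb[i]
                break
        else:
            return None  # no drive has room for this file
    return placement


drives = [500, 500, 500]  # three 500GB drives on the new server
print(place_files([900], drives))      # None: the single 900GB file fits nowhere
print(place_files([225] * 4, drives))  # {0: 0, 1: 0, 2: 1, 3: 1}
```

In practice you'd also leave free space on each drive for future growth, which this sketch doesn't model.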

In a similar manner, multiple smaller files provide additional flexibility in overcoming a number of other storage-related issues. For example, if a disk drive is approaching capacity, it's much easier (and quicker) to detach a database and move one or two smaller files than it is to move a single large file.

Transaction log

As we covered earlier, transaction log files are written to sequentially. Although it's possible to create more than one transaction log file per database, there's no benefit in doing so.

Some DBAs create multiple transaction log files in a futile attempt at increasing performance. Transaction log performance is obtained through other strategies we've already covered, such as using dedicated disk volumes, implementing faster disks, using a RAID 10 volume, and ensuring the disk controller has sufficient write cache.

For both transaction logs and data files, sizing the files correctly is crucial in avoiding disk fragmentation and poor performance.

3. Sizing database files

One

of the major benefits of SQL Server is that it offers multiple features

that enable databases to continue running with very little

administrative effort, but such features often come with downsides. One

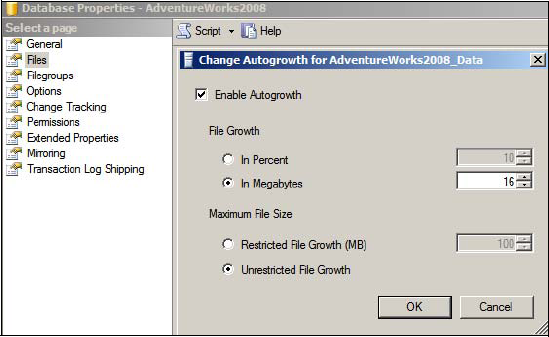

such feature, as shown in figure 2, is the Enable Autogrowth option, which enables a database file to automatically expand when full.

The problem with the autogrowth feature is that every time the file grows, all activity on the file is suspended until the growth operation is complete. If enabled, instant initialization (covered shortly) reduces the time required for such actions, but clearly the better alternative is to initialize the database files with an appropriate size before the database begins to be used. Doing so not only avoids autogrowth operations but also reduces disk fragmentation.

Consider a worst-case scenario: a database is created with all of the default settings. The file size and autogrowth properties will be inherited from the model database, which by default has a 3MB data file set to autogrow in 1MB increments and a 1MB log file with 10 percent autogrowth increments. If the database is subjected to a heavy workload, the data file will need to autogrow every time another 1MB of space is consumed, which could be many times per second. Worse, the transaction log grows by 10 percent per autogrowth; after many autogrowth operations, each growth adds a large amount of space to the transaction log, a problem exacerbated by the fact that transaction logs can't use instant initialization.
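
The worst case above can be quantified with some back-of-the-envelope arithmetic. This Python sketch counts growth operations for fixed and percentage increments. It's a simplified model that ignores any internal rounding SQL Server applies to growth sizes. The function names are hypothetical, and the 10GB target is an illustrative assumption.

```python
import math


def fixed_growth_events(start_mb, target_mb, increment_mb):
    """Autogrowth operations needed to grow a file from start_mb to at
    least target_mb when each growth adds a fixed increment_mb."""
    if target_mb <= start_mb:
        return 0
    return math.ceil((target_mb - start_mb) / increment_mb)


def percent_growth_events(start_mb, target_mb, percent):
    """Simulate percentage autogrowth. Returns (number of growths,
    size in MB of the final growth increment)."""
    size, events, last_increment = start_mb, 0, 0.0
    while size < target_mb:
        last_increment = size * percent / 100
        size += last_increment
        events += 1
    return events, last_increment


# Default model settings from the text, grown to a hypothetical 10GB (10,240MB).
print(fixed_growth_events(3, 10 * 1024, 1))  # 10237 data file growths
events, last = percent_growth_events(1, 10 * 1024, 10)
print(events, round(last))                   # 97 log growths; the last adds ~941MB
```

The same arithmetic shows why 1MB increments don't suit a database growing by 10GB per day. `fixed_growth_events(0, 10 * 1024, 1)` is 10,240 growth operations per day, and each one suspends activity on the file while it completes.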

In addition to appropriate presizing, part of a proactive database maintenance routine should be regular inspections of space usage within a database and transaction log. By observing growth patterns, you can manually expand the files by an appropriate size ahead of autogrowth operations.
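
Inspecting growth patterns can be as simple as projecting a trend. The sketch below uses a hypothetical `days_until_full` helper and made-up daily samples to estimate how many days remain before a file fills. That estimate tells you when to expand the file manually. The used-space figures could come from periodic queries of `FILEPROPERTY(name, 'SpaceUsed')`, which reports 8KB pages. The linear model is an assumption and won't capture irregular surges.

```python
def days_until_full(used_mb_samples, file_size_mb):
    """Estimate days until a file's used space reaches its allocated size.

    used_mb_samples: used space in MB, one sample per day, oldest first.
    Uses the average daily growth across the samples (a linear model).
    Returns None if no growth was observed.
    """
    if len(used_mb_samples) < 2:
        raise ValueError("need at least two daily samples")
    avg_daily_growth = (used_mb_samples[-1] - used_mb_samples[0]) / (len(used_mb_samples) - 1)
    if avg_daily_growth <= 0:
        return None
    return (file_size_mb - used_mb_samples[-1]) / avg_daily_growth


# Hypothetical daily samples for a file currently sized at 50,000MB.
samples = [40_000, 40_500, 41_000, 41_600, 42_000]
print(days_until_full(samples, 50_000))  # 16.0
```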

Despite the negative aspects of autogrowth, it's useful in handling unexpected surges in growth that can otherwise result in out-of-space errors and subsequent downtime. The best use of this feature is for emergencies only, not as a replacement for adequate presizing and proactive maintenance. Further, the autogrowth increments should be set appropriately; for example, setting a database to autogrow in 1MB increments isn't appropriate for a database that grows by 10GB per day.

Given its unique nature, presizing database files is of particular importance for the tempdb database.

Tempdb

The tempdb database, used for the temporary storage of various objects, is unique in that it's re-created each time SQL Server starts. Unless tempdb's file sizes are manually altered, the database will be re-created with default (very small) file sizes each time SQL Server is restarted. For databases that make heavy use of tempdb, this often manifests itself as very sluggish performance for quite some time after a SQL Server restart, with many autogrowth operations required before an appropriate tempdb size is reached.

To obtain the ideal starting size of tempdb files, pay attention to the size of tempdb once the server has been up and running long enough to cover the full range of database usage scenarios, such as index rebuilds, DBCC operations, and user activity. Ideally, these observations come from load simulation in volume-testing environments before a server is commissioned for production. Bear in mind that any given SQL Server instance has a single tempdb database shared by all user databases, so use across all databases must be taken into account during any load simulation.
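
Turning those observations into a starting size can be sketched as follows. The helper names, the sample values, and the 20 percent headroom are all hypothetical assumptions. The even split across eight files follows the one-file-per-core example earlier. The samples should be instance-wide tempdb sizes, because tempdb is shared by every database on the instance.

```python
import math


def tempdb_start_size_mb(size_samples_mb, headroom_pct=20):
    """Suggest a starting tempdb size: the peak observed size plus headroom.

    size_samples_mb: instance-wide tempdb sizes sampled over a period that
    covers index rebuilds, DBCC operations, and normal user activity.
    """
    peak = max(size_samples_mb)
    return math.ceil(peak * (100 + headroom_pct) / 100)


def per_file_size_mb(total_mb, file_count):
    """Split the total evenly so every tempdb data file starts the same size."""
    return math.ceil(total_mb / file_count)


samples = [2_000, 9_500, 18_000, 12_000, 7_000]  # hypothetical, in MB
total = tempdb_start_size_mb(samples)
print(total, per_file_size_mb(total, 8))  # 21600 2700
```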

One other aspect you should consider when sizing database files, particularly when using multiple files, is SQL Server's proportional fill algorithm.

Proportional fill

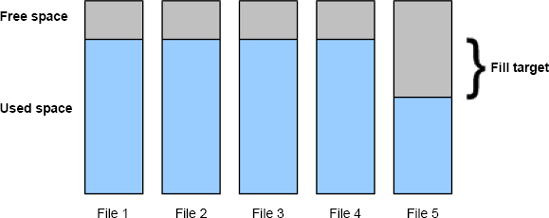

When a database filegroup (covered shortly) uses multiple data files, SQL Server spreads new allocations across the files in proportion to each file's free space, a technique called proportional fill, as shown in figure 3.

If one file has significantly more free space than the others, SQL Server will use that file until its free space is roughly the same as that of the other files. This is particularly important when multiple database files are used to overcome allocation contention. Unless each file is sized identically and grown by the same amount, allocations concentrate on the file with the most free space, and the contention the extra files were meant to relieve returns.
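
A simplified Python model makes the consequence visible. Here each extent (64KB) goes to the file with the most free space, which approximates the behavior described above. SQL Server's real implementation differs in detail. The function name and file sizes are hypothetical.

```python
def simulate_fill(free_extents, allocations):
    """Allocate extents one at a time, always to the file with the most
    free space (ties go to the lowest-numbered file).

    A simplified model of proportional fill, not SQL Server's internal
    algorithm. Returns the number of extents each file received.
    """
    free = list(free_extents)
    received = [0] * len(free)
    for _ in range(allocations):
        target = max(range(len(free)), key=lambda i: free[i])
        if free[target] == 0:
            raise RuntimeError("all files full; autogrowth would be needed")
        free[target] -= 1
        received[target] += 1
    return received


print(simulate_fill([100, 100, 100, 100], 8))    # [2, 2, 2, 2]: load is shared
print(simulate_fill([100, 100, 100, 400], 300))  # [0, 0, 0, 300]: one hotspot file
```

With equally sized files, allocations rotate across all four files and their allocation bitmaps. With one oversized file, every allocation in the run lands on that single file.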

4. Instant initialization

In versions of SQL Server prior to 2005, files were zero padded on creation and during manual or autogrowth operations. In SQL Server 2005 and above, the instant initialization feature avoids the need for this process, resulting in faster database initialization, growth, and restore operations.

Besides reducing the impact of autogrowth operations, instant initialization is particularly beneficial in disaster-recovery situations. Assuming a database is being restored as a new database, the files must first be created before the data can be restored; for very large databases, creating and zero padding the files can take a significant amount of time, increasing downtime. In contrast, instant initialization avoids the zero pad process and therefore reduces downtime, and the benefit increases linearly with the size of the database being restored.
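
Zero padding writes every byte of the file, so its cost grows linearly with file size. The sketch below estimates the zeroing time that instant initialization avoids for data files. It assumes a hypothetical sustained write rate of 200MB/s; real throughput depends entirely on the storage.

```python
def zeroing_seconds(size_gb, write_mb_per_sec):
    """Seconds needed to zero pad size_gb of file space at a sustained write rate."""
    return size_gb * 1024 / write_mb_per_sec


# Hypothetical sustained write rate of 200MB/s.
for size_gb in (250, 500, 1000):
    minutes = zeroing_seconds(size_gb, 200) / 60
    print(f"{size_gb}GB: ~{minutes:.0f} minutes of zero padding before data can be restored")
```

Doubling the database size doubles the wait. Transaction log files are zeroed regardless, because instant initialization doesn't apply to them.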

The instant initialization feature, available only for data files (not transaction log files), requires the SQL Server service account to have the Perform Volume Maintenance Tasks privilege. Local Admin accounts automatically have this privilege, but running the service under such an account isn't recommended from a least-privilege perspective; therefore, you have to manually grant the service account this privilege to take advantage of the instant initialization feature.

Earlier in this section we explored the various reasons for using multiple data files for a database. A common reason for doing so is to enable the use of filegroups.