In the second part of the tutorial, we’ll be adding audio recording and playback to the application. Unlike the movie player, we’ll be using classes within the AV Foundation framework to implement these features. As you’ll learn, very little coding needs to be done to make this work!

For the recorder, we’ll use the AVAudioRecorder class and these methods:

initWithURL:settings:error: Provided with an NSURL instance pointing to a local file and an NSDictionary containing a few settings, this method returns an initialized recorder, ready to use.

record: Begins recording.

stop: Ends the recording session.

Not coincidentally, the playback feature, built on an instance of AVAudioPlayer, uses some very similar methods:

initWithContentsOfURL:error: Creates an audio player object that can be used to play back the contents of the file pointed to by an NSURL object.

play: Plays back the audio.

When you were entering the contents of the MediaPlaygroundViewController.h file a bit earlier, you may have noticed that we slipped in a protocol: AVAudioPlayerDelegate. By conforming to this protocol, we can implement the method audioPlayerDidFinishPlaying:successfully:, which is invoked automatically when our audio player finishes playing back the recording. No notifications needed this time around!

Adding the AV Foundation Framework

To use the AVAudioPlayer and AVAudioRecorder classes, we must add the AV Foundation framework to the project.

Right-click the Frameworks folder icon in the Xcode project, and choose Add, Existing Frameworks. Select AVFoundation.framework, and then click Add.

Watch Out!

Remember, the framework also requires a corresponding import line in your header file (#import <AVFoundation/AVFoundation.h>) to access the classes and methods. We added this earlier when setting up the project.

Implementing Audio Recording

To add audio recording, we need to create the recordAudio: method, but before we do, let’s think through this a bit. What happens when we initiate a recording? In this application, recording will continue until we press the button again.

To implement this functionality, the “recorder” object itself must persist between calls to the recordAudio: method. We’ll make sure this happens by using the soundRecorder instance variable in the MediaPlaygroundViewController class (declared in the project setup) to hold the AVAudioRecorder object. Because we set up the object in the viewDidLoad method, it will be available anywhere and anytime we need it. Edit MediaPlaygroundViewController.m and add the code in Listing 1 to viewDidLoad.

Listing 1.

```objc
1: - (void)viewDidLoad {
2:     NSString *tempDir;
3:     NSURL *soundFile;
4:     NSDictionary *soundSetting;
5:
6:     tempDir=NSTemporaryDirectory();
7:     soundFile=[NSURL fileURLWithPath:
8:         [tempDir stringByAppendingPathComponent:@"sound.caf"]];
9:
10:    soundSetting = [NSDictionary dictionaryWithObjectsAndKeys:
11:        [NSNumber numberWithFloat: 44100.0],AVSampleRateKey,
12:        [NSNumber numberWithInt: kAudioFormatMPEG4AAC],AVFormatIDKey,
13:        [NSNumber numberWithInt: 2],AVNumberOfChannelsKey,
14:        [NSNumber numberWithInt: AVAudioQualityHigh],AVEncoderAudioQualityKey,
15:        nil];
16:
17:    soundRecorder = [[AVAudioRecorder alloc] initWithURL: soundFile
18:                                               settings: soundSetting
19:                                                  error: nil];
20:
21:    [super viewDidLoad];
22: }
```


Beginning with the basics, lines 2–4 declare a string, tempDir, that will hold the application’s temporary directory (where we’ll store the sound recording); a URL, soundFile, which will point to the sound file itself; and soundSetting, a dictionary that will hold the settings that tell the recorder how it should record.

In line 6, we use NSTemporaryDirectory() to grab and store the temporary directory path where your application can store its sound file.

Lines 7–8 append "sound.caf" to the temporary directory path. The resulting string is then used to initialize a new instance of NSURL, which is stored in the soundFile variable.
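Because Listing 3, later in this section, rebuilds exactly the same path for playback, one option is to compute it in a single place. The sketch below assumes a hypothetical C helper named MPRecordingFileURL (not part of the tutorial project). It uses stringByAppendingPathComponent:, which inserts a "/" separator only when one is needed, so the result does not depend on whether NSTemporaryDirectory() returns a path ending in a slash.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (illustrative name, not part of the tutorial project).
// Returns a file URL for "sound.caf" inside the app's temporary directory.
// stringByAppendingPathComponent: adds a "/" separator only if needed, so
// the path is correct with or without a trailing slash on the directory.
static NSURL *MPRecordingFileURL(void) {
    NSString *tempDir = NSTemporaryDirectory();
    NSString *path = [tempDir stringByAppendingPathComponent:@"sound.caf"];
    return [NSURL fileURLWithPath:path];
}
```

With a helper like this, lines 6–8 of both listings could shrink to soundFile = MPRecordingFileURL();, which guarantees that the recorder and the player agree on where the recording lives.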

Lines 10–15 create an NSDictionary object that contains keys and values for configuring the format of the sound being recorded. Unless you’re familiar with audio recording, many of these might be pretty foreign sounding. Here’s the 30-second summary:

AVSampleRateKey: The number of audio samples the recorder will take per second.

AVFormatIDKey: The recording format for the audio.

AVNumberOfChannelsKey: The number of audio channels in the recording. Stereo audio, for example, has two channels.

AVEncoderAudioQualityKey: A quality setting for the encoder.
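To make the sample rate and channel count concrete: with the values from Listing 1, the recorder captures 44,100 samples per second on each of 2 channels, or 88,200 samples per second in total, before the AAC encoder compresses them. The sketch below reads those two entries back out of a settings dictionary and multiplies them; MPSamplesPerSecond is a hypothetical, illustrative name, not an AV Foundation API.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (illustrative name, not an AV Foundation API).
// Total samples captured per second = AVSampleRateKey * AVNumberOfChannelsKey.
// This is the raw capture rate before encoding, not the size of the
// resulting (compressed) file.
static double MPSamplesPerSecond(NSDictionary *settings) {
    double sampleRate = [[settings objectForKey:AVSampleRateKey] doubleValue];
    NSInteger channels = [[settings objectForKey:AVNumberOfChannelsKey] integerValue];
    return sampleRate * (double)channels;
}
```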

By the Way

To learn more about the different settings, what they mean, and what the possible options are, read the AVAudioRecorder Class Reference (scroll to the “Constants” section) in the Xcode developer documentation utility.

Watch Out!

The audio format specified in the settings is defined in the CoreAudioTypes.h file. Because the settings reference an audio type by name, you must import this file (#import <CoreAudio/CoreAudioTypes.h>).

Again, this was completed in the initial project setup, so there’s no need to make any changes now.

In lines 17–19, the audio recorder, soundRecorder, is initialized with the soundFile URL and the settings stored in the soundSetting dictionary. We pass nil for the error parameter because, for this example, we don’t care whether an error occurs. If we did, we would pass the address of an NSError pointer instead, and any error would be returned through it.
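If you would rather find out why initialization failed, the pattern might look like the following sketch. MPCreateRecorder is a hypothetical helper; the only AV Foundation call it relies on is the same initWithURL:settings:error: used in Listing 1, this time given the address of an NSError variable instead of nil.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (illustrative name, not an AV Foundation API).
// Creates a recorder the same way lines 17-19 of Listing 1 do, but asks for
// an NSError so a failure can be reported. Returns nil if initialization
// fails. Under manual reference counting (as in this tutorial), the caller
// owns the returned recorder and must eventually release it, following the
// "Create" naming convention.
static AVAudioRecorder *MPCreateRecorder(NSURL *fileURL, NSDictionary *settings) {
    NSError *error = nil;
    AVAudioRecorder *recorder = [[AVAudioRecorder alloc] initWithURL:fileURL
                                                            settings:settings
                                                               error:&error];
    if (recorder == nil) {
        // The error object describes what went wrong.
        NSLog(@"Could not create audio recorder: %@", [error localizedDescription]);
    }
    return recorder;
}
```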

Controlling Recording

With the soundRecorder allocated and initialized, all that we need to do is implement recordAudio: so that the record and stop methods are invoked as needed. To make things interesting, we’ll have the recordButton change its title between Record Audio and Stop Recording when pressed.

Add the following code in Listing 2 to MediaPlaygroundViewController.m.

Listing 2.

```objc
1: -(IBAction)recordAudio:(id)sender {
2:     if ([recordButton.titleLabel.text isEqualToString:@"Record Audio"]) {
3:         [soundRecorder record];
4:         [recordButton setTitle:@"Stop Recording"
5:                       forState:UIControlStateNormal];
6:     } else {
7:         [soundRecorder stop];
8:         [recordButton setTitle:@"Record Audio"
9:                       forState:UIControlStateNormal];
10:    }
11: }
```


In line 2, the method checks the title of the recordButton variable. If it is set to Record Audio, the method uses [soundRecorder record] to start recording (line 3), and then, in lines 4–5, sets the recordButton title to Stop Recording. If the title doesn’t read Record Audio, then we’re already in the process of making a recording. In this case, we use [soundRecorder stop] in line 7 to end the recording and set the button title back to Record Audio in lines 8–9.
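Comparing the button’s title works, but it ties the logic to user-visible text, which can break if the title is later reworded or localized. A possible alternative is to ask the recorder itself: AVAudioRecorder has a read-only recording property (getter isRecording), and record returns NO if recording could not start. The sketch below wraps this in a hypothetical helper, MPToggleRecording, that returns the title the button should show next.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (illustrative name, not part of the tutorial project).
// Starts or stops recording based on the recorder's own state and returns
// the title the record button should display afterward.
static NSString *MPToggleRecording(AVAudioRecorder *recorder) {
    if ([recorder isRecording]) {
        [recorder stop];
        return @"Record Audio";
    }
    // -record returns NO if recording couldn't start; keep the old title then.
    return [recorder record] ? @"Stop Recording" : @"Record Audio";
}
```

Inside recordAudio:, the body could then become a single line such as [recordButton setTitle:MPToggleRecording(soundRecorder) forState:UIControlStateNormal];.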

That’s it for recording! Let’s implement playback so that we can actually hear what we’ve recorded!

Controlling Audio Playback

To play back the audio that we recorded, we’ll create an instance of the AVAudioPlayer class, point it at the sound file we created with the recorder, and then call the play method. We’ll also add the method audioPlayerDidFinishPlaying:successfully: defined by the AVAudioPlayerDelegate protocol so that we’ve got a convenient place to release the audio player object.

Start by adding the playAudio: method, in Listing 3, to MediaPlaygroundViewController.m.

Listing 3.

```objc
1: -(IBAction)playAudio:(id)sender {
2:     NSURL *soundFile;
3:     NSString *tempDir;
4:     AVAudioPlayer *audioPlayer;
5:
6:     tempDir=NSTemporaryDirectory();
7:     soundFile=[NSURL fileURLWithPath:
8:         [tempDir stringByAppendingPathComponent:@"sound.caf"]];
9:
10:    audioPlayer = [[AVAudioPlayer alloc]
11:        initWithContentsOfURL:soundFile error:nil];
12:
13:    [audioPlayer setDelegate:self];
14:    [audioPlayer play];
15: }
```


In lines 2–3, we define variables for holding the application’s temporary directory and a URL for the sound file, exactly as we did for recording.

Line 4 declares the audioPlayer instance of AVAudioPlayer.

Lines 6–8 should look familiar because once again, they grab and store the temporary directory and use it to initialize an NSURL object, soundFile, that points to the sound.caf file we’ve recorded.

In lines 10 and 11, the audio player, audioPlayer, is allocated and initialized with the contents of soundFile.

Line 13 is a bit out of the ordinary, but nothing too strange. The setDelegate: method is called with self as its argument. This tells the audioPlayer instance that it can look in the view controller object (MediaPlaygroundViewController) for its AVAudioPlayerDelegate protocol methods.

Line 14 initiates playback using the play method.
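Listing 3 quietly does nothing if there is no playable file: when initWithContentsOfURL:error: can’t open the URL it returns nil, and messages sent to nil are ignored. If you’d like to detect a missing file explicitly, for example to show an alert, one simple check is whether the file exists before creating the player. MPRecordingExists below is a hypothetical helper built on NSFileManager’s fileExistsAtPath:.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper (illustrative name, not part of the tutorial project).
// Returns YES if a file exists at fileURL's path. Existence alone doesn't
// prove the file holds playable audio, so the player returned by
// initWithContentsOfURL:error: is still worth checking for nil.
static BOOL MPRecordingExists(NSURL *fileURL) {
    return [[NSFileManager defaultManager] fileExistsAtPath:[fileURL path]];
}
```

In playAudio:, a line such as if (!MPRecordingExists(soundFile)) return; placed after line 8 would skip playback when the file is missing.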

Handling Cleanup

To handle releasing the AVAudioPlayer instance after it has finished playing, we need to implement the protocol method audioPlayerDidFinishPlaying:successfully:. Add the following method code to the view controller implementation file:

```objc
- (void)audioPlayerDidFinishPlaying:(AVAudioPlayer *)player
                       successfully:(BOOL)flag {
    // Balance the alloc in playAudio: (manual reference counting)
    [player release];
}
```

We get a reference to the player we allocated via the incoming player parameter, so we just send it the release message and we’re done!

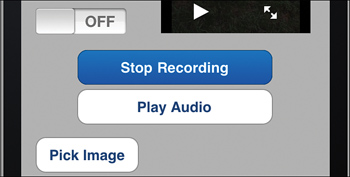

Choose Build and Run in Xcode to test recording and playback. Press Record Audio to begin recording. Talk, sing, or yell at your iPhone. Touch Stop Recording, as shown in Figure 1, to end the recording. Finally, press the Play Audio button to initiate playback.

It’s time to move on to the next part of this hour’s exercise: accessing and displaying photos from the photo library.