Standardization

The most successful implementations of SMS, or of any other software distribution tool, have historically been in standardized environments. Standardizing operating systems, patch levels, application loads, hardware, and overall configuration allows administrators to test their deployments before rolling them out to the enterprise. Hardware-independent imaging, only recently a reality, allows administrators to build a single image and inject drivers on the fly during the deployment process. This process allows you to use one image for any number of hardware manufacturers and models, creating the most standardized OS deployment process available today.
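The single-image idea can be sketched as a lookup. Every machine receives the same base image, and only the drivers injected during deployment vary by manufacturer and model. The catalog, the manufacturer and model names (Contoso, Fabrikam), the driver names, and the image file name are all hypothetical. This sketch does not show how ConfigMgr actually stores or selects drivers.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class Machine:
    name: str
    manufacturer: str
    model: str

# Hypothetical single base image shared by every machine.
BASE_IMAGE = "corp-standard.wim"

# Hypothetical drivers injected into every deployment.
COMMON_DRIVERS = ["storage-generic-3.0"]

# Hypothetical driver catalog keyed by (manufacturer, model).
DRIVER_CATALOG: Dict[Tuple[str, str], List[str]] = {
    ("Contoso", "Desk 100"): ["nic-contoso-1.2", "storage-generic-3.0"],
    ("Fabrikam", "Laptop 5"): ["nic-fabrikam-2.1", "wifi-fabrikam-4.0"],
}

def drivers_for(machine: Machine) -> List[str]:
    """Drivers to inject on the fly for this machine: common drivers first, no duplicates."""
    specific = DRIVER_CATALOG.get((machine.manufacturer, machine.model), [])
    result: List[str] = []
    for driver in COMMON_DRIVERS + specific:
        if driver not in result:
            result.append(driver)
    return result

def deployment_plan(machines: List[Machine]) -> Dict[str, Tuple[str, List[str]]]:
    """Every machine gets the same image; only the injected drivers differ."""
    return {m.name: (BASE_IMAGE, drivers_for(m)) for m in machines}
```

A model missing from the catalog still receives the base image and the common drivers. Supporting a new model only requires a catalog entry, not a new image.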

Inventory allows administrators to logically group systems by any combination of hardware and software. This inventory data is useful in identifying deviations from the standards an organization has defined. Inventory data shows organizations the software installed on client systems, and software metering shows them the software executed on client systems. Analyzing these two sets of data helps organizations make educated decisions regarding software deployments and licensing.
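The analysis described above can be sketched with plain sets. The machines, products, RAM figures, and "standard load" below are hypothetical sample data, not real ConfigMgr inventory or metering output. The sketch shows how combining the two data sets surfaces deviations from a standard and identifies installations that are never used.

```python
from typing import Callable, Dict, Set

# Hypothetical records combining hardware inventory (ram_gb), software
# inventory ("installed"), and software metering ("executed") for one period.
SYSTEMS: Dict[str, dict] = {
    "PC1": {"ram_gb": 2, "installed": {"Office", "Visio", "Acrobat"}, "executed": {"Office", "Acrobat"}},
    "PC2": {"ram_gb": 4, "installed": {"Office", "Visio"}, "executed": {"Office", "Visio"}},
    "PC3": {"ram_gb": 8, "installed": {"Office", "Project"}, "executed": {"Office"}},
}

# Hypothetical organizational standard application load.
STANDARD_LOAD = {"Office"}

def group(systems: Dict[str, dict], predicate: Callable[[dict], bool]) -> Set[str]:
    """Logically group systems by any combination of hardware and software criteria."""
    return {name for name, rec in systems.items() if predicate(rec)}

def deviations(systems: Dict[str, dict], standard: Set[str]) -> Dict[str, Set[str]]:
    """Software installed beyond the standard load, per system."""
    extras = {name: rec["installed"] - standard for name, rec in systems.items()}
    return {name: apps for name, apps in extras.items() if apps}

def installed_but_unused(systems: Dict[str, dict], product: str) -> Set[str]:
    """Systems where inventory shows the product but metering never saw it run."""
    return group(systems, lambda r: product in r["installed"] and product not in r["executed"])
```

For example, `group(SYSTEMS, lambda r: r["ram_gb"] < 4 and "Visio" in r["installed"])` combines a hardware and a software criterion and returns `{"PC1"}`. `installed_but_unused(SYSTEMS, "Visio")` also returns `{"PC1"}`, which marks it as a candidate for reclaiming a license.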

Operating system deployments and migrations allow organizations to standardize their environment, controlling installations and configurations at both the operating system and application levels. Historically, operating system deployments have been a manual and time-consuming process, making it extremely difficult for large organizations to standardize their environments. Now operating system deployments are easier than ever, allowing levels of standardization that were previously out of reach because of technology, resources, and expense.

Remote Management

Information Technology has increasingly become a top expense in today’s organizations. Resolving issues quickly once they are identified requires tools that the first tier of support (usually referred to as Tier 1) can use to perform tasks as though they were sitting at the end user’s system. These tools are critical to managing and lowering the total cost of ownership (TCO) of IT assets.

Tools such as Remote Control, Remote Desktop, and Remote Assistance give administrators the ability to run commands, see the installed hardware and software, and, above all, see the same user interface the user is seeing. This type of remote control has become an industry standard for remote user support. Branch offices, and micro-branch offices with fewer than 10 users, have become increasingly common as corporations change and grow to stay competitive. These business models further complicate remote management. Site visits are often not an option, which makes remote tools critical to supporting the environment.

Software Distribution

Deploying software is one of the primary functions of any Information Technology group. Getting users the applications, updates, security patches, and other changes required to keep an environment running is critical to a business’s success. For any company to stay agile in today’s market, IT must be able to adapt and meet the business’s needs in a timely, efficient, and inexpensive manner. It is therefore critical to be able to deploy software to systems regardless of their location, and regardless of the user’s knowledge of, or rights on, the system.

There are two primary methods to get a software update to a system: push and pull. Both methods ultimately deliver the desired software to the targeted systems, but they differ in many ways, and neither is right for every situation.

Pulling Software

“Pulling” implies

that the owner or recipient of the package must initiate some action.

This allows the recipient to initiate the change when it is convenient,

thus minimizing the impact on productivity. Allowing a user to pull down

an application and install it at his or her leisure is also useful for

rolling out software with user-specific settings the recipient needs to

specify during installation. Even on locked-down systems where users

have no rights, the end user can initiate and execute software

installations as long as they were set up and approved by the IT

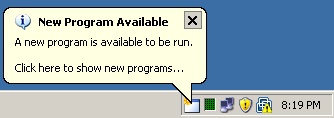

administrative staff. Figure 1 illustrates the end-user notification that a program is available for installation.

The problem with a software change sent out in a “pull” fashion is that, without user initiation, the change never occurs. This is a problem when changes must take place on systems because of company policy. It is especially a problem when the change is something a user may not want, such as removing a personal application from a company asset. This type of application distribution is typically used when users are very self-sufficient and avoiding negative impact on the end user is important.

Pushing Software

“Pushing” software refers to forcing changes to be rolled out in the enterprise. Pushing does not require user interaction, and it can be enforced like a policy. In fact, pushing software assumes that no user interaction is required, although an installation routine that does need user input does not prevent an administrator from pushing it out. You can push software whether or not a user is logged on to a system. A pull, by contrast, requires the user to be logged on to initiate the change. The ability to kick off a change on systems with no one logged on offers several advantages:

It eliminates file-locking issues, because no user applications are holding files open.

It removes disruption to the end user’s productivity.

Systems can be shut down after the installation, so the reboot cycle completes when the next user starts the computer.

Installation duration is not an issue, because there is no user impact.

Systems can be woken up using Wake On LAN technology to kick off an installation.

Users are unable to interrupt the installation.
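Wake On LAN relies on a well-defined packet format. The minimal sketch below builds the standard "magic packet" (six 0xFF bytes followed by the target MAC address repeated 16 times) and sends it as a UDP broadcast. It is a generic illustration, not ConfigMgr’s own wake-up mechanism. The broadcast address and UDP port 9 are common defaults assumed here. The target machine’s network adapter and firmware must have Wake On LAN enabled for the packet to have any effect.

```python
import socket

def magic_packet(mac: str) -> bytes:
    """Build a Wake On LAN magic packet: 6 bytes of 0xFF, then the MAC address 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"invalid MAC address: {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # also raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16

def wake(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Send the magic packet as a UDP broadcast on the local subnet."""
    packet = magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))
```

A limited broadcast such as 255.255.255.255 normally does not cross routers. Waking machines on another subnet therefore requires a subnet-directed broadcast address or an agent on that subnet.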

To Pull or Push

Although neither method of applying changes solves every scenario, each can be used in conjunction with the other to address all types of requirements for change, configuration, and release management.
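The trade-offs above can be condensed into a rule of thumb. The sketch below is a hypothetical decision helper, not a ConfigMgr feature. The three attributes are distilled from the text. The "pull-then-push" outcome assumes a combined approach: the software is offered for users to pull first, then pushed at a deadline to catch anyone who did not act.

```python
from dataclasses import dataclass

@dataclass
class Change:
    mandatory_by_policy: bool    # must occur even if the user never acts (e.g. removing a personal app)
    must_run_unattended: bool    # e.g. locked files, long installs, after-hours windows
    users_self_sufficient: bool  # users can be trusted to install at their convenience

def choose_method(change: Change) -> str:
    """Hypothetical rule of thumb: return 'push', 'pull', or 'pull-then-push'."""
    if change.must_run_unattended:
        # Only a push can run with no one logged on.
        return "push"
    if change.mandatory_by_policy:
        # A pull may never be initiated, so a policy-driven change must end in a push;
        # self-sufficient users can be offered a window to pull it first.
        return "pull-then-push" if change.users_self_sufficient else "push"
    # Optional software: let users pull it when convenient.
    return "pull"
```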

Minimizing Impact on the Network Infrastructure

Networks are critical to the daily functions of corporations today. Because of the heavy demand placed on networks, ConfigMgr uses several concepts and technologies to minimize its impact on the network infrastructure. Many of these technologies can be configured centrally. Other administrators can then leverage the ConfigMgr architecture without needing deep knowledge of the enterprise’s network infrastructure. Background Intelligent Transfer Service (BITS), distribution points, branch distribution points, Download and Execute, senders, and compressed XML are just a few of the technologies available.

BITS

BITS monitors traffic in and out of the local network interfaces and throttles itself throughout a download, using only the available idle bandwidth. This minimizes the impact on the user’s experience with other network-aware applications, such as Microsoft Outlook or Internet Explorer. As users’ applications demand more data across the network, BITS throttles itself down. As the demand drops, BITS throttles itself up to use as much bandwidth as is available.
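The throttling behavior can be illustrated with a deliberately simplified model. In each second, the background transfer uses only the capacity left over after foreground traffic. The real BITS algorithm is more nuanced than this sketch, and the capacity, package size, and traffic figures are hypothetical.

```python
from typing import List, Tuple

def idle_bandwidth(capacity: float, foreground: float) -> float:
    """Bandwidth left over after other applications' traffic (never negative)."""
    return max(0.0, capacity - foreground)

def simulate_download(package_size: float, capacity: float,
                      foreground_by_second: List[float]) -> Tuple[List[float], bool]:
    """Simulate a background download that uses only idle bandwidth, one second at a time.

    Units just need to match (e.g. KB and KB/s). Returns the rate used in each
    second and whether the package finished within the simulated period.
    """
    remaining = package_size
    rates: List[float] = []
    for foreground in foreground_by_second:
        if remaining <= 0:
            break
        rate = min(idle_bandwidth(capacity, foreground), remaining)
        rates.append(rate)
        remaining -= rate
    return rates, remaining <= 0
```

Take a 250 KB package on a 100 KB/s link with foreground traffic of `[20, 90, 100, 10, 0]`. The download backs off to 10 KB/s and then to nothing while the user is busy, then recovers to 90 KB/s. It finishes in the fifth second.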

Distribution Points

It is important to understand that you can place distribution points (DPs) anywhere, and it is not unusual to have multiple DPs on a single subnet! This is a common architectural design when there is a high demand to run packages from the distribution point or when there are a large number of clients. Multiple DPs are also commonly implemented in a single location when there are concerns about router contention or heavy switch traffic.

Branch Distribution Points

Branch distribution points give ConfigMgr the ability to push a package across a WAN link to a location with a very small number of clients while traversing the WAN link only once with the package. SMS 1.x through 2003 pushed a separate copy of the package over the WAN link for every client in the remote location. Third-party software existed to address this SMS architectural deficiency, but it was costly and not well known. Now that a client workstation can be a distribution point, the branch office or micro-branch office model can easily be targeted and have packages pushed to it.

Whereas ordinary distribution points have packages published (also referred to as copied or pushed) to them by the ConfigMgr administrator, branch distribution points receive their copy of a package by updating their policy and then downloading the package source using BITS. This pull process is the opposite of how packages are ordinarily published to conventional distribution points.
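The WAN savings described above are simple arithmetic. If each remote client pulls its own copy across the link, WAN traffic grows with the client count. A branch distribution point brings one copy across, and clients are then served locally. The package size, client count, and link speed used below are hypothetical, and protocol overhead is ignored.

```python
def wan_megabytes(package_mb: float, remote_clients: int, branch_dp: bool) -> float:
    """Data that crosses the WAN link to deliver one package to a remote location."""
    copies = 1 if branch_dp else remote_clients  # branch DP: one copy, then served locally
    return package_mb * copies

def transfer_hours(megabytes: float, link_kbps: float) -> float:
    """Hours to move the data over the link, ignoring overhead (1 MB = 8,000 kilobits)."""
    return megabytes * 8_000 / link_kbps / 3_600
```

Consider a 500 MB package and eight remote clients. In the per-client model, 4,000 MB crosses the WAN, which takes about 17.4 hours on a 512 kbps link. With a branch DP, only 500 MB crosses, which takes about 2.2 hours.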

Download and Execute

Download and Execute is a feature introduced in SMS 2003. It leverages BITS to cache a copy of the package from the DP. Many people ask, “Why would I want to cache a copy of a package if I have a local DP?”

The reason is quite simple: when packages are assigned, they are mandatory and launch at the time stated in the advertisement. Because Kerberos tickets are time sensitive, the clocks on domain computers today are all synchronized, directly or indirectly, with the clock on the PDC Emulator domain controller. This means that all clients with a mandatory advertisement start it at nearly the same time, placing a heavy load on the local distribution point. Download and Execute lets clients cache a copy of the package ahead of time and, at execution time, perform the installation from their local hard drive. Administrators can configure many aspects of this behavior, including how long the copy of the package is held in the cache.
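The load problem can be made concrete by counting how many clients read from the DP at the same moment. The model rests on several hypothetical assumptions. There are 200 clients, and each download takes 5 minutes. Without caching, every client reads the package from the DP at the assignment time. Under Download and Execute, clients pre-cache the package as they receive policy, spread evenly over a 60-minute window, and then install from the local cache.

```python
from typing import List

def peak_concurrency(start_times: List[float], duration: float) -> int:
    """Maximum number of package downloads from the DP in progress at the same moment."""
    events = []
    for start in start_times:
        events.append((start, 1))              # a client begins reading from the DP
        events.append((start + duration, -1))  # that client finishes
    # Tuples sort by time, then delta, so at equal times finishes (-1) precede starts (+1).
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak

CLIENTS = 200           # hypothetical number of clients on the local DP
DOWNLOAD_MINUTES = 5.0  # hypothetical time for one client to read the package
POLICY_WINDOW = 60.0    # hypothetical window (minutes) over which clients pick up policy

# Without caching: every client reads the package from the DP at the assignment time.
run_from_dp = [0.0] * CLIENTS

# Download and Execute: clients pre-cache as they receive policy (evenly spread here).
pre_cache = [i * POLICY_WINDOW / CLIENTS for i in range(CLIENTS)]
```

Under these assumptions, `peak_concurrency(run_from_dp, DOWNLOAD_MINUTES)` returns 200 and `peak_concurrency(pre_cache, DOWNLOAD_MINUTES)` returns 17. At the assignment time itself, the installations run from the local cache and place no load on the DP.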

Senders

Senders allow traffic between ConfigMgr sites to be throttled and prioritized by time of day. Administrators often overlook configuring senders and leave the default of allowing all traffic at all times of the day. Without configured senders there is no throttling, and traffic that could have waited until off-peak hours may consume the network infrastructure. Wherever parent/child relationships exist, experiment with senders to determine what is right for the environment in terms of time of day and workload.
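The two ideas behind sender configuration, time-of-day windows by priority and bandwidth caps, can be modeled as a small schedule. The hours, priority levels, backup window, and percentage caps below are hypothetical illustrations of the concept. They are not the actual ConfigMgr sender address settings or their defaults.

```python
from enum import IntEnum
from typing import Dict, Optional

class Priority(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3

def build_schedule() -> Dict[int, Optional[Priority]]:
    """Hypothetical hour-by-hour schedule: minimum priority allowed to send, None = closed."""
    schedule: Dict[int, Optional[Priority]] = {}
    for hour in range(24):
        if 1 <= hour < 3:
            schedule[hour] = None            # hypothetical backup window: no site-to-site traffic
        elif 8 <= hour < 18:
            schedule[hour] = Priority.HIGH   # business hours: high-priority traffic only
        else:
            schedule[hour] = Priority.LOW    # off-peak: all priorities
    return schedule

def rate_limit_percent(hour: int) -> int:
    """Hypothetical cap on the share of link bandwidth the sender may use in a given hour."""
    return 25 if 8 <= hour < 18 else 100

def may_send(schedule: Dict[int, Optional[Priority]], hour: int, priority: Priority) -> bool:
    """True if traffic of this priority is allowed to leave the site at this hour."""
    minimum = schedule.get(hour)
    return minimum is not None and priority >= minimum
```

In this example, a medium-priority package replication queued at 10:00 waits until 18:00. There it runs without a cap instead of competing with daytime traffic.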

Inventory

Even at the inventory level, changes allow more efficient use of the network. Hardware and software inventories are generated by their respective agents, with the output created in XML. This XML file is then compressed and sent to the management point (MP) immediately. If the MP is not available, the client caches the inventory until the MP is available again or until the next inventory schedule, when the inventory is rerun.
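The generate, compress, send-or-cache flow can be sketched with the Python standard library. Several elements are hypothetical: the XML schema, the `send` callable (standing in for the transport to the MP), and the in-memory `pending` list (standing in for the client’s on-disk cache). The use of zlib is this sketch’s choice; the text does not say which compression ConfigMgr uses.

```python
import zlib
import xml.etree.ElementTree as ET
from typing import Callable, Dict, List

Sender = Callable[[bytes], None]  # hypothetical transport to the MP

def inventory_xml(machine: str, properties: Dict[str, str]) -> bytes:
    """Serialize inventory properties as XML (a made-up schema, not ConfigMgr's)."""
    root = ET.Element("Inventory", {"machine": machine})
    for name, value in properties.items():
        ET.SubElement(root, "Property", {"name": name}).text = value
    return ET.tostring(root, encoding="utf-8")

def submit(report: bytes, send: Sender, pending: List[bytes]) -> bool:
    """Compress the report and send it to the MP; if the MP is unreachable, cache it."""
    compressed = zlib.compress(report, 9)
    try:
        send(compressed)
        return True
    except OSError:
        pending.append(compressed)  # keep it until the MP is available again
        return False

def flush_pending(send: Sender, pending: List[bytes]) -> int:
    """Resend cached reports in order, stopping at the first failure; return how many were sent."""
    sent = 0
    while pending:
        try:
            send(pending[0])
        except OSError:
            break  # MP still unavailable; keep the remaining reports cached
        pending.pop(0)
        sent += 1
    return sent
```

Connection failures such as `ConnectionError` are subclasses of `OSError`, so they land in the cache path.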

Testing

With all this technology available to tread lightly on the network, it is important to experiment with these settings in a lab environment. Because of the nature of the product, it is not uncommon for administrators to make mistakes that saturate WAN links. Test any change that could affect the network negatively, confirming in advance that its impact will be acceptable. Experimenting with different site configurations, package pushes, and client package execution can determine the overall load on the site, network, and distribution points.

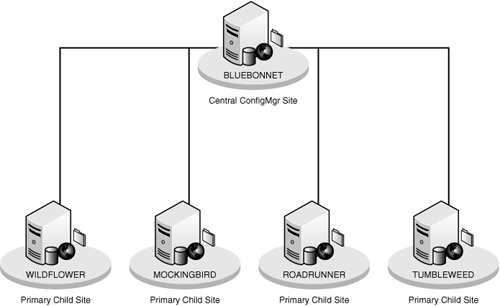

A ConfigMgr lab is needed to validate the site hierarchy and to load test, and it can also be used for package, security, patch, and upgrade testing. The lab incorporates a central site server (Bluebonnet) and four primary child site servers:

Wildflower: DAL site

Mockingbird: HOU site

Roadrunner: BEJ site

Tumbleweed: BXL site