Along with index maintenance and backups, executing regular DBCC checks is very straightforward for small databases, or those with large maintenance windows. In an ideal world, every night would see a full backup, a complete rebuild of all indexes, and a full CHECKDB operation. The reality, however, is quite different. Large databases, particularly those in use 24/7, don't have the luxury of a nightly maintenance plan like the one just described.

1. Running against backups

One

of the ways of offloading the overhead of a DBCC check is running the

check on a restored copy of a production database in a test

environment. If a page is corrupted on the source database, it will be

corrupted on the restored copy. Not only is this a valid approach in

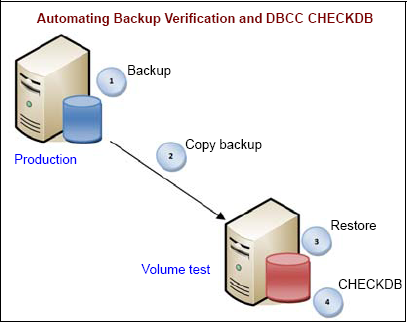

offloading the impact of the DBCC check operation, automating this process, as shown in figure 1,

enables a number of best practices to be handled in one process;

verifying backups, performing DBCC checks, and maintaining a recent

database copy for disaster recovery and/or reporting purposes.

The one (minor) downside of this method arises when corruption is actually found in the restored copy. At this point, the DBCC check would need to be performed on the production database to confirm that the corruption exists and that it wasn't introduced by the copy or restore process.
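The restore-then-check process described above could be scripted along the following lines. This is a minimal sketch rather than the author's automation: the verification database name, the backup path, the target file paths, and the logical file names are all assumptions and would need to match the real backup.

```sql
-- Sketch only: restore the latest full backup under a different name on a
-- test server, then check the restored copy.
-- All names and paths below are hypothetical.
RESTORE DATABASE AdventureWorks2008_Verify
FROM DISK = N'T:\Backups\AdventureWorks2008_Full.bak'
WITH
    MOVE N'AdventureWorks2008_Data' TO N'T:\Verify\AdventureWorks2008_Verify.mdf',
    MOVE N'AdventureWorks2008_Log'  TO N'T:\Verify\AdventureWorks2008_Verify.ldf',
    REPLACE,   -- overwrite the copy left behind by the previous run
    RECOVERY;  -- bring the copy online so it can be checked

-- Full logical and physical checks against the copy, reporting errors only.
DBCC CHECKDB (N'AdventureWorks2008_Verify') WITH NO_INFOMSGS, ALL_ERRORMSGS;
```

A successful restore doubles as a backup verification. Any errors reported by CHECKDB on the copy would then be confirmed against production, as noted above.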

Assuming no test environment exists for the purposes of offloading CHECKDB impact from production, one of the other options is using the WITH PHYSICAL_ONLY option, discussed next.

2. WITH PHYSICAL_ONLY

DBCC CHECKDB performs a number of logical and physical checks. By specifying the WITH PHYSICAL_ONLY option, you can reduce the runtime substantially by performing a reduced set of checks that excludes the extensive logical checks.

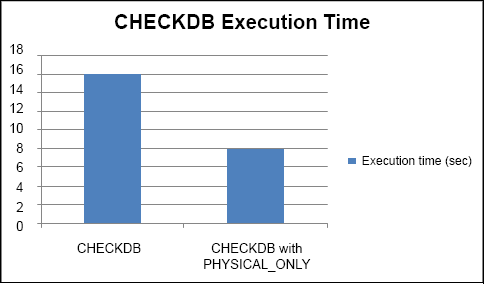

As the example in figure 2 shows, running DBCC CHECKDB against the AdventureWorks2008 database with and without the PHYSICAL_ONLY option highlights a significant difference in the execution time. Performing the full check takes roughly double the amount of time compared to running CHECKDB using the WITH PHYSICAL_ONLY option (SQL Server was restarted before each test). Although this was a simple test against a small database, it's easy to see how such time savings would be considerable against a much larger database.

When executed with the PHYSICAL_ONLY option, CHECKDB reads each database page and checks the allocation consistency via CHECKALLOC. Further, if page checksums are present, it will validate those as well. Using page checksums in conjunction with PHYSICAL_ONLY reduces the production impact of CHECKDB while giving reasonable assurance that the I/O system is behaving itself and not causing any corruption.
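As a brief illustration of the combination just described, the following sketch enables page checksums and then runs the reduced check. It uses the AdventureWorks2008 database from the earlier timing example. Note that a checksum is added to a page only when that page is next written after the option is enabled, so existing pages are not protected immediately.

```sql
-- Confirm the current page verification setting.
SELECT name, page_verify_option_desc
FROM sys.databases
WHERE name = N'AdventureWorks2008';

-- Enable page checksums if they are not already in use.
ALTER DATABASE AdventureWorks2008 SET PAGE_VERIFY CHECKSUM;

-- Reduced check: page reads, allocation consistency, and checksum validation,
-- without the extensive logical checks.
DBCC CHECKDB (N'AdventureWorks2008') WITH PHYSICAL_ONLY, NO_INFOMSGS;
```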

Need to know when CHECKDB last completed without finding any corruption? After enabling trace flag 3604 with DBCC TRACEON (3604), run DBCC PAGE (dbname, 1, 9, 3), replacing dbname with the required database name. The date and time of the last successful corruption-free CHECKDB will be listed in the dbi_dbccLastKnownGood field.
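Written out as a batch, the check for the last clean CHECKDB might look like the sketch below. DBCC PAGE is an undocumented command, so its output format may differ between versions. The WITH TABLERESULTS option is an addition here, used only to return the output as a result set that is easier to search for the dbi_dbccLastKnownGood row.

```sql
-- Send DBCC output to the client session rather than the error log.
DBCC TRACEON (3604);

-- File 1, page 9 is the database boot page; print option 3 shows full detail.
DBCC PAGE (N'AdventureWorks2008', 1, 9, 3) WITH TABLERESULTS;

DBCC TRACEOFF (3604);
```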

Despite the suitability of this option for production use, I recommend that you run a full CHECKDB when possible. A common maintenance design is for nightly PHYSICAL_ONLY checks with a full CHECKDB scheduled weekly, or alternatively, a nightly PHYSICAL_ONLY check combined with a weekly restore and CHECKDB verification in a load-testing or backup verification environment.

Another option for reducing the impact of DBCC is implementing a more granular verification approach, covered next.

3. Partitioned and granular checks

One of the alternatives to running CHECKDB is to run CHECKTABLE for a subset of tables, spreading the load over a number of nights—for example, running CHECKTABLE on three tables per night over seven nights rather than 21 tables in one night. On one (or more) of these nights, CHECKALLOC and CHECKCATALOG can also be run. Like CHECKDB, the CHECKTABLE command also takes the PHYSICAL_ONLY option, so the impact can be reduced further.
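One way to spread CHECKTABLE over seven nights is sketched below. The bucketing rule (object ID modulo 7) is an assumption made for illustration: it is simple and repeatable, but it does not balance the nights by table size, so a real schedule would probably assign large tables explicitly.

```sql
USE AdventureWorks2008;

-- Tonight's bucket, 0 to 6, derived from the number of days since 1900-01-01.
DECLARE @bucket int = DATEDIFF(DAY, 0, GETDATE()) % 7;
DECLARE @name nvarchar(517), @sql nvarchar(max);

-- Select the tables whose object ID falls into tonight's bucket.
DECLARE tbl CURSOR LOCAL FAST_FORWARD FOR
    SELECT QUOTENAME(s.name) + N'.' + QUOTENAME(t.name)
    FROM sys.tables AS t
    JOIN sys.schemas AS s ON s.schema_id = t.schema_id
    WHERE t.object_id % 7 = @bucket;

OPEN tbl;
FETCH NEXT FROM tbl INTO @name;
WHILE @@FETCH_STATUS = 0
BEGIN
    -- Add PHYSICAL_ONLY to the WITH clause to reduce the impact further.
    SET @sql = N'DBCC CHECKTABLE (''' + REPLACE(@name, N'''', N'''''')
             + N''') WITH NO_INFOMSGS;';
    EXEC sys.sp_executesql @sql;
    FETCH NEXT FROM tbl INTO @name;
END
CLOSE tbl;
DEALLOCATE tbl;

-- On one of the nights, also run the allocation and catalog checks.
IF @bucket = 0
BEGIN
    DBCC CHECKALLOC WITH NO_INFOMSGS;
    DBCC CHECKCATALOG WITH NO_INFOMSGS;
END
```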

The DBCC CHECKFILEGROUP command also presents opportunities for granular checking by enabling checks on large databases to occur one filegroup at a time. In some cases, particular filegroups may be infrequently modified, or may be read-only. In such cases, excluding these filegroups from the checks, or running them less frequently, may enable the runtime to be cut substantially.
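A filegroup-level check could look like the following sketch. The PRIMARY filegroup exists in every database; any other filegroup name would come from the query shown first.

```sql
USE AdventureWorks2008;

-- List the filegroups and identify any that are read-only and so are
-- candidates for less frequent checking.
SELECT name, is_read_only
FROM sys.filegroups;

-- Check a single filegroup in the current database.
DBCC CHECKFILEGROUP (N'PRIMARY') WITH NO_INFOMSGS;
```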

By default, if multiple CPUs are available to a SQL Server instance and the MAXDOP setting allows, DBCC CHECKDB, CHECKTABLE, and CHECKFILEGROUP will use parallel checking. To disable parallel checking, use trace flag 2528, or manually adjust the MAXDOP setting for the period of the DBCC operation.
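For example, parallel checking could be switched off around a single check, as in this sketch. It enables the trace flag for the current session only; whether a session-level or global flag is more appropriate depends on how the check is scheduled.

```sql
-- Disable parallel object checking for this session.
DBCC TRACEON (2528);

DBCC CHECKDB (N'AdventureWorks2008') WITH NO_INFOMSGS;

-- Restore the default behavior.
DBCC TRACEOFF (2528);
```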

In closing this section, let's consider one final technique for controlling DBCC activity: user-defined snapshots.

4. User-defined snapshots

A database snapshot uses sparse file technology to create a near-instant, read-only copy of a database. The snapshot is initially empty with all page reads redirected to the base database. When the original database is modified, the page to be modified is copied to the snapshot before the modification occurs. The snapshot therefore represents the database at the time of the snapshot. Over time, as more and more of the database is modified, the snapshot becomes larger and larger; the maximum size is the size of the database at the time of the snapshot.

DBCC CHECK* commands use a special hidden database snapshot to ensure the operation runs against a transactionally consistent copy of the database that is accurate as of the time of the DBCC execution. This process is an improvement over earlier DBCC checks that used either table locks or complex transaction log operations that sometimes led to false positives.

When running a DBCC check against a database that's receiving updates, each of the pages to be modified must be copied to the snapshot to ensure DBCC views the page in its pre-update form. The more updates that occur during the DBCC check, the larger the snapshot will be. By default, the snapshot files are created in the same disk location as the database files. If the number of updates causes the snapshot to grow to the point where there isn't enough disk space for the snapshot files, the DBCC check will fail after running out of disk space.

Apart from running DBCC checks during periods of low activity or using the TABLOCK option (which will block user activity), running DBCC checks against a user-defined snapshot allows the snapshot files to be placed in a location with the appropriate amount of free disk space. This ensures that concurrent updates won't grow the snapshot to consume all the available disk space on the disk(s) storing the database files. Further, placing the snapshot on a physically separate disk may assist in isolating the snapshot-related disk overhead, thereby reducing the performance impact on users.
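The user-defined snapshot approach might be sketched as follows. The snapshot name, the S:\Snapshots path, and the logical file name AdventureWorks2008_Data are assumptions for illustration. Every data file in the source database needs a corresponding entry in the ON clause, so a database with several data files would list each one.

```sql
-- Create the snapshot's sparse file on a disk with sufficient free space,
-- separate from the disks holding the database files.
CREATE DATABASE AdventureWorks2008_DBCC
ON ( NAME = N'AdventureWorks2008_Data',  -- logical name of the source data file
     FILENAME = N'S:\Snapshots\AdventureWorks2008_DBCC.ss' )
AS SNAPSHOT OF AdventureWorks2008;

-- Run the check against the snapshot rather than the source database.
DBCC CHECKDB (N'AdventureWorks2008_DBCC') WITH NO_INFOMSGS, ALL_ERRORMSGS;

-- Remove the snapshot once the check completes.
DROP DATABASE AdventureWorks2008_DBCC;
```

The logical file names for the ON clause can be read from sys.database_files in the source database.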