SQL Server 2008 introduced a new data compression feature that is available in Enterprise and Datacenter Editions. Data compression helps to reduce both storage and memory requirements, as the data is compressed both on disk and when brought into the SQL Server data cache.

When compression is enabled and data is written to disk, it is compressed and stored in the designated compressed format. When the data is read from disk into the buffer cache, it remains in its compressed format. This reduces both storage and memory requirements. It also reduces I/O, because more data fits on a data page when it is compressed. When the data is passed to another component of SQL Server, however, the Database Engine has to decompress it on the fly. In other words, every time data is passed to or from the buffer cache, it has to be compressed or decompressed, which adds CPU overhead. In most cases, however, the I/O and buffer cache savings from compression more than make up for the CPU cost, improving the overall performance of SQL Server.

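Data compression can be applied on the following database objects:

Tables stored as heaps

Tables stored as clustered indexes

Nonclustered indexes

Indexed views

Partitioned tables and indexes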

As the DBA, you need to evaluate which of the preceding objects in your database could benefit from compression and then decide whether to compress each one using row-level or page-level compression. Compression is enabled or disabled at the object level; there is no single option you can enable that turns compression on or off for all objects in the database. Fortunately, other than turning compression on or off for the preceding objects, you don’t have to do anything else to use data compression. SQL Server handles data compression transparently, without your having to re-architect your database or your applications.
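As a starting point for that evaluation, the following query is a minimal sketch that lists every user table and index partition in a database together with its current compression setting, as exposed by the data_compression_desc column of sys.partitions. It assumes the bigpubs2008 sample database is installed; any user database works the same way.

```sql
USE bigpubs2008;

-- One row per partition of every user heap, clustered index,
-- nonclustered index, and indexed view, with its compression setting.
SELECT OBJECT_SCHEMA_NAME(p.object_id) AS obj_schema,
       OBJECT_NAME(p.object_id)        AS obj_name,
       i.name                          AS index_name,   -- NULL for a heap
       i.type_desc,                                     -- HEAP, CLUSTERED, NONCLUSTERED
       p.partition_number,
       p.rows,
       p.data_compression_desc                          -- NONE, ROW, or PAGE
FROM sys.partitions AS p
JOIN sys.indexes AS i
  ON i.object_id = p.object_id
 AND i.index_id  = p.index_id
WHERE OBJECTPROPERTY(p.object_id, 'IsMsShipped') = 0    -- skip system objects
ORDER BY obj_schema, obj_name, p.index_id, p.partition_number;
```

Indexed views show up alongside tables because they are stored as clustered indexes.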

Row-Level Compression

Row-level compression isn’t true data compression. Instead, space savings are achieved by using a more efficient storage format for fixed-length data so that it uses only the minimum amount of space required. For example, the int data type uses 4 bytes of storage regardless of the value stored, even NULL. However, only a single byte is required to store a value of 100. Row-level compression allows fixed-length values to use only the amount of storage space required.
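To see this effect, the following sketch builds a small hypothetical table (dbo.small_int_demo, not part of any sample database) whose val column holds only values between 0 and 100 and compares its size before and after enabling row compression. It assumes an edition that supports data compression and that sys.all_objects has enough rows for the cross join to produce 100,000 rows. Actual sizes vary, so no specific figures are shown.

```sql
-- Hypothetical demo table: every val is between 0 and 100, so under row
-- compression each val needs at most 1 byte instead of a fixed 4 bytes.
CREATE TABLE dbo.small_int_demo
(
    id  int NOT NULL,
    val int NULL
);
GO

-- Generate 100,000 rows by cross joining a catalog view with itself.
INSERT INTO dbo.small_int_demo (id, val)
SELECT n, n % 101
FROM (SELECT TOP (100000)
             ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) AS n
      FROM sys.all_objects AS a
      CROSS JOIN sys.all_objects AS b) AS numbers;

EXEC sp_spaceused N'dbo.small_int_demo';   -- size before compression

ALTER TABLE dbo.small_int_demo REBUILD WITH (DATA_COMPRESSION = ROW);

EXEC sp_spaceused N'dbo.small_int_demo';   -- size after row compression
```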

Row-level compression saves space and reduces I/O by:

Reducing the amount of metadata required to store data rows

Storing fixed-length numeric data types as if they were variable-length data types, using only as many bytes as necessary to store the actual value

Storing CHAR data types as variable-length data types

Not storing NULL or 0 values

Row-level data compression provides less compression than page-level data compression, but it also incurs less overhead, reducing the amount of CPU resources required to implement it.
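One way to weigh that trade-off before choosing either option is the sp_estimate_data_compression_savings system procedure, which samples the object into tempdb and reports its current size and its estimated size under the requested setting. This sketch compares ROW and PAGE estimates for the titles table in the bigpubs2008 database, assuming the table lives in the dbo schema.

```sql
USE bigpubs2008;

-- Estimate ROW compression for every index and partition of dbo.titles.
EXEC sp_estimate_data_compression_savings
     @schema_name      = N'dbo',
     @object_name      = N'titles',
     @index_id         = NULL,     -- NULL = all indexes
     @partition_number = NULL,     -- NULL = all partitions
     @data_compression = N'ROW';

-- The same estimate for PAGE compression, for comparison.
EXEC sp_estimate_data_compression_savings
     @schema_name      = N'dbo',
     @object_name      = N'titles',
     @index_id         = NULL,
     @partition_number = NULL,
     @data_compression = N'PAGE';
```

Compare the size_with_current_compression_setting(KB) and size_with_requested_compression_setting(KB) columns across the two result sets. The procedure estimates space only; the extra CPU cost still has to be measured under your own workload.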

Row-level compression can be enabled when you create a table or index, or afterward with the ALTER TABLE or ALTER INDEX command, by specifying the WITH (DATA_COMPRESSION = ROW) option. The following example enables row compression on the titles table in the bigpubs2008 database:

```sql
USE bigpubs2008;
ALTER TABLE titles REBUILD WITH (DATA_COMPRESSION = ROW);
```
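The same option can be supplied when an object is created. In this sketch, dbo.sales_archive and its index are hypothetical names. The example also shows that a nonclustered index does not inherit the table's compression setting, so it has to be compressed explicitly, either when it is created or by rebuilding it.

```sql
-- Hypothetical heap created with row compression from the start.
CREATE TABLE dbo.sales_archive
(
    stor_id  char(4)     NOT NULL,
    ord_num  varchar(20) NOT NULL,
    ord_date datetime    NOT NULL,
    qty      smallint    NOT NULL
)
WITH (DATA_COMPRESSION = ROW);
GO

-- Created without the option, this index stays uncompressed
-- even though the table itself is row-compressed.
CREATE NONCLUSTERED INDEX ix_sales_archive_ord_date
    ON dbo.sales_archive (ord_date);
GO

-- Switch the existing index to row compression by rebuilding it.
ALTER INDEX ix_sales_archive_ord_date
    ON dbo.sales_archive
    REBUILD WITH (DATA_COMPRESSION = ROW);
GO

-- Verify: both the heap and the index should now report ROW.
SELECT i.index_id, i.type_desc, p.data_compression_desc
FROM sys.partitions AS p
JOIN sys.indexes AS i
  ON i.object_id = p.object_id AND i.index_id = p.index_id
WHERE p.object_id = OBJECT_ID(N'dbo.sales_archive');
```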

Additionally, if a table or index is partitioned, you can apply compression at the partition level.
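The following sketch shows partition-level compression on a hypothetical table partitioned by year; the partition function, scheme, and table names are all illustrative. A RANGE RIGHT function with three boundary values yields four partitions, so older data can be compressed while the partition receiving current inserts is left uncompressed.

```sql
-- Four partitions: < 2006, 2006, 2007, >= 2008.
CREATE PARTITION FUNCTION pf_order_year (int)
    AS RANGE RIGHT FOR VALUES (2006, 2007, 2008);
GO
CREATE PARTITION SCHEME ps_order_year
    AS PARTITION pf_order_year ALL TO ([PRIMARY]);
GO

CREATE TABLE dbo.orders_by_year
(
    order_id   int   NOT NULL,
    order_year int   NOT NULL,
    amount     money NOT NULL
) ON ps_order_year (order_year);
GO

-- Compress the three older partitions; leave the current one uncompressed.
ALTER TABLE dbo.orders_by_year
REBUILD PARTITION = ALL
WITH (DATA_COMPRESSION = ROW  ON PARTITIONS (1 TO 3),
      DATA_COMPRESSION = NONE ON PARTITIONS (4));
GO

-- Later, a single partition can be rebuilt on its own.
ALTER TABLE dbo.orders_by_year
REBUILD PARTITION = 4 WITH (DATA_COMPRESSION = ROW);
GO

-- Confirm the per-partition setting.
SELECT partition_number, rows, data_compression_desc
FROM sys.partitions
WHERE object_id = OBJECT_ID(N'dbo.orders_by_year')
ORDER BY partition_number;
```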

When row-level compression is applied to a table, a new row format is used that is unlike the standard data row format discussed previously, which has a fixed-length data section separate from a variable-length data section.

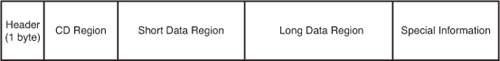

This new row format is referred to as column descriptor, or CD, format. The name refers to the fact that every column has description information contained in the row itself. Figure 1 illustrates a representative view of the CD format (a definitive view is difficult because, except for the header, the number of bytes in each region depends entirely on the values in the data row).

The row header is always 1 byte in length and contains information similar to Status Bits A in a normal data row:

Bit 0: This bit indicates the type of record (1 = CD record format).

Bit 1: This bit indicates whether the row contains versioning information.

Bits 2–4: This three-bit value indicates what kind of information is stored in the row (such as primary record, ghost record, forwarding record, or index record).

Bit 5: This bit indicates whether the row contains a long data region (with values greater than 8 bytes in length).

Bits 6 and 7: These bits are not used.
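To make this bit layout concrete, the following sketch decodes a single hypothetical header byte using T-SQL bitwise AND and integer division (SQL Server 2008 has no shift operators). It assumes bit 0 is the least significant bit. The numeric codes for the three-bit record kind are not listed here, so that field is returned as a raw value.

```sql
-- Hypothetical header byte 0x21 (binary 0010 0001), written as the integer 33.
-- Assumes bit 0 is the least significant bit.
DECLARE @hdr tinyint = 33;

SELECT @hdr & 1          AS is_cd_format,     -- bit 0
       (@hdr & 2) / 2    AS has_versioning,   -- bit 1
       (@hdr & 28) / 4   AS record_kind,      -- bits 2-4 (mask 0001 1100), raw value 0-7
       (@hdr & 32) / 32  AS has_long_region;  -- bit 5
```

For the value 33, the query returns 1, 0, 0, and 1: a CD-format row with no versioning information and with a long data region.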

The CD region consists of two parts. The first is either a 1- or 2-byte value indicating the number of short columns (8 bytes or less). If the most significant bit of the first byte is 0, it’s a 1-byte field representing up to 127 columns; if it’s 1, it’s a 2-byte field representing up to 32,767 columns. Following the first 1 or 2 bytes is the CD array. The CD array uses 4 bits for each column in the table to represent information about the length of the column. A value of 0 indicates that the column is NULL. Values 1 through 9 indicate that the column is 0 through 8 bytes in length, respectively. A value of 10 (0xa) indicates that the corresponding column value is a long data value and uses no space in the short data region. A value of 11 (0xb) represents a bit column with a value of 1, and a value of 12 (0xc) indicates that the corresponding value is a 1-byte symbol representing a value in the page compression dictionary (the page compression dictionary is discussed next in the page-level compression section).
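The value ranges above can be expressed as a lookup. This sketch first checks whether the short-column count occupies 1 or 2 bytes, then translates a set of hypothetical 4-bit CD array entries into their meanings. How pairs of entries are packed into each byte is not covered here, so the sketch supplies the entries already separated rather than guessing a nibble order.

```sql
-- Hypothetical first byte of the CD region. If its most significant bit (128)
-- is 0, the short-column count fits in this single byte; otherwise it takes 2 bytes.
DECLARE @first_byte tinyint = 12;
SELECT CASE WHEN (@first_byte & 128) = 0 THEN 1 ELSE 2 END AS count_field_bytes;

-- Hypothetical 4-bit CD array entries for six columns.
DECLARE @cd TABLE (col_no int PRIMARY KEY, descriptor tinyint NOT NULL);
INSERT INTO @cd (col_no, descriptor)
VALUES (1, 0), (2, 1), (3, 5), (4, 10), (5, 11), (6, 12);

-- Decode each entry using the value ranges described in the text.
SELECT col_no,
       descriptor,
       CASE
           WHEN descriptor = 0 THEN 'NULL'
           WHEN descriptor BETWEEN 1 AND 9
               THEN CAST(descriptor - 1 AS varchar(2)) + ' byte(s) in the short data region'
           WHEN descriptor = 10 THEN 'long data value (no space in the short data region)'
           WHEN descriptor = 11 THEN 'bit column with a value of 1'
           WHEN descriptor = 12 THEN '1-byte symbol in the page compression dictionary'
           ELSE 'value not described in this section'
       END AS meaning
FROM @cd
ORDER BY col_no;
```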

The short data region contains each of the short data values. However, because accessing the last columns can be expensive if there are hundreds of columns in the table, columns are grouped into clusters of 30 columns. At the beginning of the short data region is an area called the short data cluster array. Each entry in the array is a single byte that indicates the sum of the sizes of all the data in the previous cluster in the short data region; the value is essentially a pointer to the first column of the cluster (no row offset is needed for the first cluster because it starts immediately after the CD region).
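The following sketch works through the cluster arithmetic for a hypothetical row with 95 short columns and invented per-cluster byte counts. It assumes each cluster array entry holds only the size of the preceding cluster, so the start of a later cluster is the running total of the sizes before it. Under that reading, only clusters 2 through 4 need an entry.

```sql
-- Hypothetical row with 95 short columns: columns 1-30, 31-60, 61-90, and 91-95
-- form four clusters. The byte counts are invented for illustration.
DECLARE @clusters TABLE (cluster_no int PRIMARY KEY, data_bytes int NOT NULL);
INSERT INTO @clusters (cluster_no, data_bytes)
VALUES (1, 110), (2, 87), (3, 140), (4, 12);

-- Which cluster holds a given column? Integer division groups columns by 30.
DECLARE @column_no int = 75;
SELECT (@column_no - 1) / 30 + 1 AS cluster_of_column;

-- Start of each cluster relative to cluster 1: the running total of the sizes
-- of all earlier clusters. A correlated subquery is used because
-- SUM() OVER (ORDER BY ...) is not available in SQL Server 2008.
SELECT c.cluster_no,
       ISNULL((SELECT SUM(p.data_bytes)
               FROM @clusters AS p
               WHERE p.cluster_no < c.cluster_no), 0) AS start_offset
FROM @clusters AS c
ORDER BY c.cluster_no;
```

Column 75 falls in cluster 3, and the clusters start at offsets 0, 110, 197, and 337. Because no short value exceeds 8 bytes, a full 30-column cluster holds at most 240 bytes, which fits in a single-byte entry.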

Any data value in the row longer than 8 bytes is stored in the long data region. This can include LOB and row-overflow pointers. Long data needs an actual offset value to allow SQL Server to locate each value. This offset array looks similar to the offset array used in the standard data row structure. The long data region consists of three parts: an offset array, a long data cluster array, and the long data. The long data cluster array is similar to the short data cluster array; it has one entry for each 30-column cluster (except for the last one) and serves to limit the cost of locating columns near the end of a long list of columns.
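As a rough approximation of how real data would split between the two regions, the following sketch counts how many values in the title column of the titles table are NULL, 8 bytes or shorter, or longer than 8 bytes. It assumes title is a variable-length character column in the dbo schema. DATALENGTH reports the logical byte length; for fixed-length numeric types it returns the declared size rather than the compressed size, so this approach only makes sense for variable-length columns like this one.

```sql
USE bigpubs2008;

-- Approximate region placement of title values in a CD-format row:
-- NULL values use only a descriptor, values of 8 bytes or less go to the
-- short data region, and longer values go to the long data region.
SELECT SUM(CASE WHEN title IS NULL THEN 1 ELSE 0 END)          AS null_titles,
       SUM(CASE WHEN DATALENGTH(title) <= 8 THEN 1 ELSE 0 END) AS short_region_titles,
       SUM(CASE WHEN DATALENGTH(title) > 8 THEN 1 ELSE 0 END)  AS long_region_titles
FROM dbo.titles;
```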

The special information section at the end of the row contains three optional pieces of information. The existence of any or all of this information is indicated by bits in the 1-byte header at the beginning of the row. The three special pieces of information are:

Forwarding pointer: This pointer is used in a heap when a row is forwarded due to an update.

Back pointer: If the row is a forwarded row, it contains a pointer back to the original row location.

Versioning information: If snapshot isolation is being used, 14 bytes of versioning information are appended to the row.
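Two of these optional pieces can be checked from T-SQL. The sketch below reports whether row versioning is enabled for bigpubs2008 (read committed snapshot also relies on row versioning) and counts forwarded records in a heap. dbo.my_heap is a hypothetical name; replace it with one of your own heap tables.

```sql
-- Is row versioning enabled for the database?
SELECT name,
       snapshot_isolation_state_desc,
       is_read_committed_snapshot_on
FROM sys.databases
WHERE name = N'bigpubs2008';

-- How many forwarded records does a heap contain? index_id 0 selects the heap
-- itself, and forwarded_record_count is only populated in SAMPLED or DETAILED mode.
DECLARE @heap_id int = OBJECT_ID(N'bigpubs2008.dbo.my_heap');   -- hypothetical heap

IF @heap_id IS NULL
    PRINT 'dbo.my_heap not found; replace it with an existing heap.';
ELSE
    SELECT index_type_desc, page_count, record_count, forwarded_record_count
    FROM sys.dm_db_index_physical_stats(DB_ID(N'bigpubs2008'), @heap_id,
                                        0, NULL, 'DETAILED');
```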